Although I strolled into class a few minutes late, I was able to catch the remainder of the lecture presented by Indiana University’s Makerspace director. It’s really cool to see more spaces that instill a sense of creativity in students opening up at colleges around the nation. After the lecture, I was left with an immense sense of pride in the University of Illinois for creating the world’s first business school 3D printing lab, a place that I, along with many of my fellow Illini, have used and continue to use as a creative hub for various projects and personal endeavors. I also got to hear how my classmates have used the MakerLab and other resources on campus to help bring their projects to life. I was astonished by the creativity, ingenuity, and passion behind each group’s progress report.

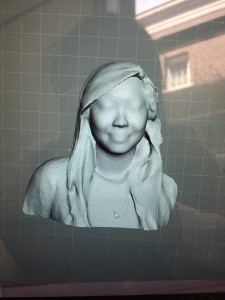

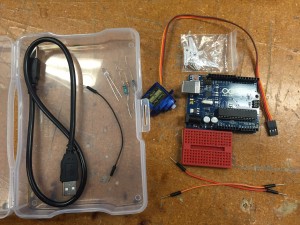

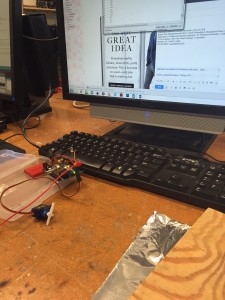

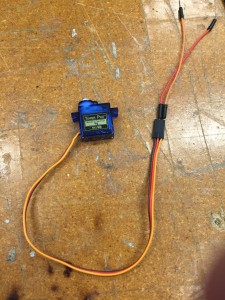

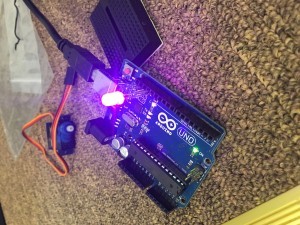

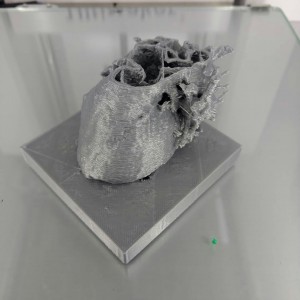

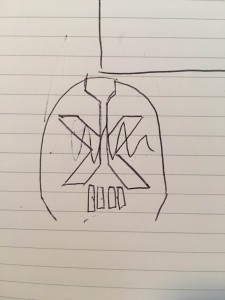

After each group presented a snippet of their project, we were free to stay in the MakerLab, go to the FabLab, or use any other resource to help advance our project. Since the Arduino is the base of our product, we went to the FabLab. During our time there, we learned a lot more about the functionality of the ukulele tuner and made slight adjustments to our existing project. While Annie was heavily researching the Arduino facet of our project, I assisted Johnny in creating the outer piece of the tuner in Fusion 360. The process was quite tricky, but we are now one step closer to where we want to be in terms of design.

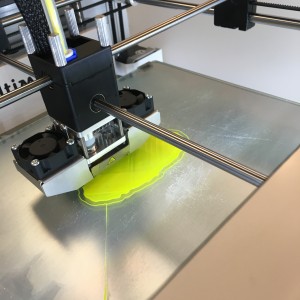

In the near future, I look forward to 3D printing the outer piece of the tuner and seeing it completed! I am also beyond pumped to see my classmates’ finished products, and I hope to continue using the MakerLab and other resources on campus for future projects. Lastly, after having slight difficulty navigating Fusion 360, I want to create more objects to become fully proficient with the software.

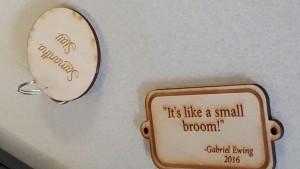

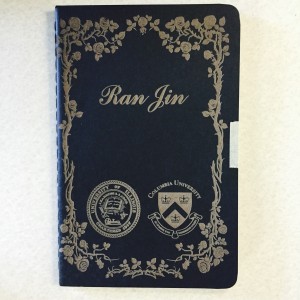

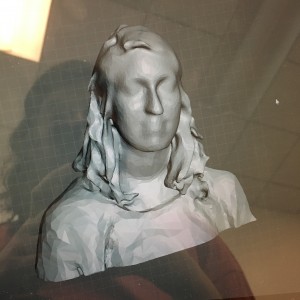

(front view)

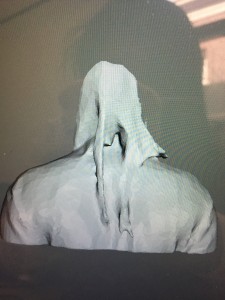

(side view)