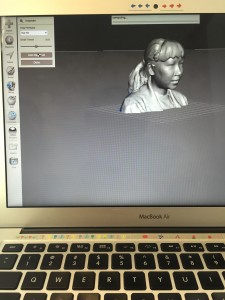

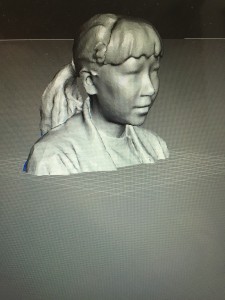

Week 11 was an exciting one for the digital makers, as it marked the last time we would be learning a new technology in our makerspace, the Digital Making Lab in the BIF. The week was dedicated to using Meshmixer software in conjunction with two types of 3D scanners: the Sense 3D Scanner and the iPad-mounted Structure Sensor. Some members of the course had previous exposure to this technology during our build-a-printer event, but it was a new learning experience for the majority of us. The scanner I used was the Structure Sensor, and it was rather intuitive to use. My partner simply did a 360-degree capture of my torso as I struck a pose. The scan was almost perfect, and the imperfections could be touched up in Meshmixer later. From there, I used the app connected to the scanner to e-mail the raw sensor data to myself.

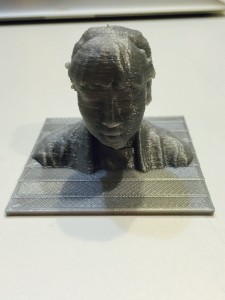

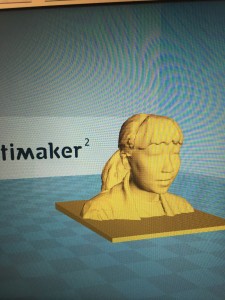

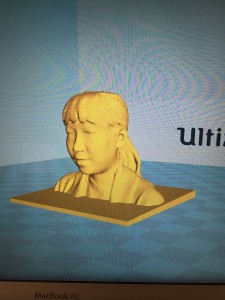

Next came the Meshmixing. This software by Autodesk allowed us to clean up the raw scanner data by patching any holes and smoothing strange bumps in our scans. There were a number of Meshmixer tools that allowed us to do this, and Arielle, our guest speaker, was able to walk us through any issues. Meshmixer also allowed us to add additional shapes onto our scans, such as a base for our busts. After smoothing out our busts, we saved them as .stl files and transferred the files to an SD card so they could be loaded into our 3D printers. Most of the students' prints took less than an hour.
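For anyone curious about what "patching holes" means under the hood, here is a minimal sketch of one common way to find a hole in a triangle mesh. This is not Meshmixer's actual code, and the function name `boundary_edges` is made up for illustration. The idea is that in a closed, printable surface every edge is shared by exactly two triangles, so any edge used by only one triangle sits on the rim of a hole.

```python
from collections import Counter


def boundary_edges(triangles):
    """Return the edges that belong to exactly one triangle.

    `triangles` is a list of (a, b, c) vertex-index tuples. In a closed
    mesh every edge is shared by two triangles, so an edge that appears
    only once lies on the rim of a hole.
    """
    counts = Counter()
    for a, b, c in triangles:
        for u, v in ((a, b), (b, c), (c, a)):
            # Sort the pair so (u, v) and (v, u) count as the same edge.
            counts[tuple(sorted((u, v)))] += 1
    return sorted(edge for edge, n in counts.items() if n == 1)


# A tetrahedron: 4 vertices, 4 faces, fully closed.
closed = [(0, 1, 2), (0, 3, 1), (1, 3, 2), (2, 3, 0)]

# The same shape with one face missing, like a gap in a scan.
open_mesh = closed[:-1]

print(boundary_edges(closed))     # []
print(boundary_edges(open_mesh))  # [(0, 2), (0, 3), (2, 3)]
```

The three edges reported for the open mesh outline the missing face, which is the loop a hole-filling tool would need to cover with new triangles.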

Arielle Rausin came in once again to speak to our class. She gained experience with the Sense scanner during her Digital Making project last year, which is one of the most successful projects to come out of UIUC’s makerspace. Arielle used one of the Beckman Institute’s scanners to scan and print a model of her wheelchair racing glove. By recreating the glove with 3D printer filament, she was able to create a lightweight version that was also more injury resistant. You can read more about her story at the following blog from last year’s digital making class: http://makerlab.illinois.edu/2015/06/09/meet-the-maker-arielle-rausin/

Her story has even made it to the new 3D printing course on Coursera. Here is a more recent interview from Arielle. It was definitely inspiring for our class to hear how our own projects can actually make a difference in the real world! There is even a group of students this semester working on an improvement to Arielle’s model.

Based on my classmates’ reflection posts, it was clear that we all saw a great deal of potential for this scanning technology. By using the scanners, any real-life object could be reverse engineered into a file that could be manipulated. The process of reverse engineering could be applied in a number of sciences whose researchers want to create models of the things they study. One particular story that jumped out at me was from the Geomagic Community Case Studies. This blog talks about how archeologists have used similar scanning technology to study the Easter Island heads, one of the biggest mysteries of the archeological community.

The students of our class had many interesting ways to describe how the 3D scanners worked. A few students compared the scanners to Microsoft’s Xbox Kinect. Paige mentioned that printing her bust felt like she was being carved into Mount Rushmore, which I found pretty funny. Many students mentioned that getting the initial scan was rather easy, and that the meshmixing was the harder part of the day’s lesson. Specifically, meshmixing the base onto our busts proved to be rather troublesome. Once that hurdle was overcome, printing our busts was a matter of loading our files onto the Ultimakers and printing, something that has become second nature to us at this point in the semester.

I found this software very useful for future scanning. I once received a 3D printed version of myself during a 3D printing expo held in BIF and just remember it looked like a very pixelated version of me. As if I was being carved into Mount Rushmore. With MeshMixer I can actually smooth out rough edges like my ears, nose, and stray hairs that the scanner picked up. MeshMixer simply allows us to create more accurate versions of our scans or do something completely crazy like combine body parts of different animals. Still not sure why that was a necessary feature someone thought to throw in there, but it definitely showcases MeshMixer’s capabilities.
