Have you ever wondered what it would sound like to listen through sixteen ears? This past March, hundreds of Central Illinois children and families experienced microphone-array augmented listening technology firsthand at the annual Engineering Open House (EOH) sponsored by the University of Illinois College of Engineering. At the event, which attracts thousands of elementary-, middle-, and high-school students and local community members, visitors learned about technologies for enhancing human and machine listening.

Listen up (or down): The technology of directional listening

Our team’s award-winning exhibit introduced visitors to several directional listening technologies, which enhance audio by isolating sounds that come from a certain direction. Directional listening is important when the sounds we want to hear are far away, or when there are many different sounds coming from different directions—like at a crowded open house! There are two ways to focus on sounds from one direction: we can physically block sounds from directions we don’t want, or we can use the mathematical tools of signal processing to cancel out those unwanted sounds. At our exhibit in Engineering Hall, visitors could try both.

This carefully designed mechanical listening device is definitely not an oil funnel from the local hardware store.

The oldest and most intuitive listening technology is the ear horn, pictured above. This horn literally funnels sound waves from the direction in which it is pointed. The effect is surprisingly strong, and there is a noticeable difference in the acoustics of the two horns we had on display. The shape of the horn affects both its directional pattern and its effect on different sound wavelengths, which humans perceive as pitch. The toy listening dish shown below operates on the same principle, but also includes an electronic amplifier. The funnels work much better for directional listening, but the spy gadget is the clear winner for style.

This toy listening dish is not very powerful, but it certainly looks cool!

These mechanical hearing aids rely on physical acoustics to isolate sound from one direction. To listen in a different direction, the user needs to physically turn them in that direction. Modern directional listening technology uses microphone arrays, which are groups of microphones spread apart from each other in space. We can use signal processing to compare and combine the signals recorded by the microphones to tell what direction a sound came from or to listen in a certain direction. We can change the direction using software, without physically moving the microphones. With sophisticated array signal processing, we can even listen in multiple directions at once, and can compensate for reflections and echoes in the room.
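To make "changing the direction using software" concrete, here is a minimal sketch of the simplest conventional array method, often called delay-and-sum beamforming. It is not the processing our exhibit used. The function name `delay_and_sum`, the helper `pulse`, and the whole-sample delays are assumptions made for illustration.

```python
def delay_and_sum(signals, delays):
    """Average the microphone signals after delaying each by whole samples.

    signals: list of equal-length lists of samples, one list per microphone
    delays:  list of non-negative integer delays in samples, one per microphone
    """
    n = len(signals[0])
    out = [0.0] * n
    for sig, d in zip(signals, delays):
        # Shift this microphone's signal later by d samples and accumulate it.
        for t in range(d, n):
            out[t] += sig[t - d]
    return [v / len(signals) for v in out]


def pulse(length, position):
    """Hypothetical test signal: silence with a single click at `position`."""
    return [1.0 if t == position else 0.0 for t in range(length)]


# A click reaches three microphones one sample apart (samples 2, 3, and 4).
mics = [pulse(8, 2), pulse(8, 3), pulse(8, 4)]

# Delays chosen so that all three copies of the click line up at sample 4.
steered = delay_and_sum(mics, [2, 1, 0])

# With no delays, the copies stay spread out and never reinforce each other.
unsteered = delay_and_sum(mics, [0, 0, 0])
```

In this toy case the steered output contains one full-strength click, while the unsteered output contains three weak ones. Pointing the array somewhere else only means choosing a different list of delays, with no moving parts.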

Alexander: A head-turning acoustic localization demo

One of the best-known products that use a microphone array is the Amazon Echo, a smart speaker powered by the Alexa voice assistant. Whenever a user says “Alexa,” the speaker lights up in the direction of the sound, then listens for a question or a command. Because it knows where the user is, it can block out other noise in the room and better understand the question. For our demonstration of acoustic localization, which means using an array to tell where a sound is coming from, we decided that a light wasn’t dramatic enough. Our upgraded assistant, named Alexander, turns his head to look at you when you talk to him! He was designed by sophomores Manan Mittal and Bryce Tharp.

Alexander turns to look at exhibit visitors when they talk.

Alexander is powered by the Matrix Creator, a development board that features an array of seven microphones in the same configuration used in the first-generation Amazon Echo. When you talk to Alexander, your voice will reach different microphones at different times. From the closest microphone to the farthest, the time delay is about 0.2 milliseconds, or 1/5000 of a second. A processor connected to the microphones uses these tiny time differences to calculate which direction the sound came from. A small motor called a servo then rotates the head to look in the talker’s direction. Visitors were understandably terrified when a faceless head snapped around to stare at them, so we dressed him up in sunglasses and a hat to make him seem friendlier. Still, perhaps there’s a good reason Amazon decided to go with a ring of lights.
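As a rough illustration of how a time difference becomes a direction, consider just two microphones and a talker far enough away that the sound arrives as a nearly flat wavefront. The sketch below is not Alexander's actual code. The speed of sound, the microphone spacing passed in, and the function name `angle_from_delay` are all assumptions chosen for illustration.

```python
import math

SPEED_OF_SOUND = 343.0  # meters per second, assumed value for room-temperature air


def angle_from_delay(delay_s, spacing_m, c=SPEED_OF_SOUND):
    """Estimate the arrival angle in degrees from the delay between two microphones.

    0 degrees means the sound hits both microphones at the same time (broadside).
    +90 or -90 degrees means it travels along the line joining the microphones.
    """
    # Far-field model: extra path length = spacing * sin(angle).
    ratio = c * delay_s / spacing_m
    # Measurement noise can push the ratio slightly past +/-1, so clamp it.
    ratio = max(-1.0, min(1.0, ratio))
    return math.degrees(math.asin(ratio))


# Distance that sound travels during the 0.2 ms delay quoted above.
path_m = SPEED_OF_SOUND * 0.2e-3
print(f"{path_m * 100:.1f} cm")  # about 6.9 cm
```

A single pair of microphones cannot tell front from back, which is one reason a ring of microphones is useful: comparing several pairs resolves the ambiguity.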

The Sombrearo: A really big hearing aid

Our research team is studying ways to apply array processing, like the technology in the Echo and other smart speakers, to improve listening devices such as hearing aids. By listening through dozens or hundreds of microphones instead of just two ears, humans could hear better in crowded, noisy places.

Visitors listen through the “Sombrearo” listening system with an array of sixteen microphones.

As it happens, our EOH exhibit booth was a crowded, noisy place, so it was the perfect spot to show off our technology. We built an array of sixteen microphones mounted on a large hat known as the “Sombrearo.” To avoid the hassle of putting the giant hat on each visitor, we put it on our mannequin, Mike A. Ray, and placed two of the sixteen microphones near his ears. The microphones were connected to a computer that processed and combined the signals and played back sound through headphones; this process is often called beamforming. The beamformer was specially designed so that the left output of the headphones corresponded to the microphone in the left ear and the right output of the headphones corresponded to the microphone in the right ear, preserving the listener’s sense of spatial awareness.

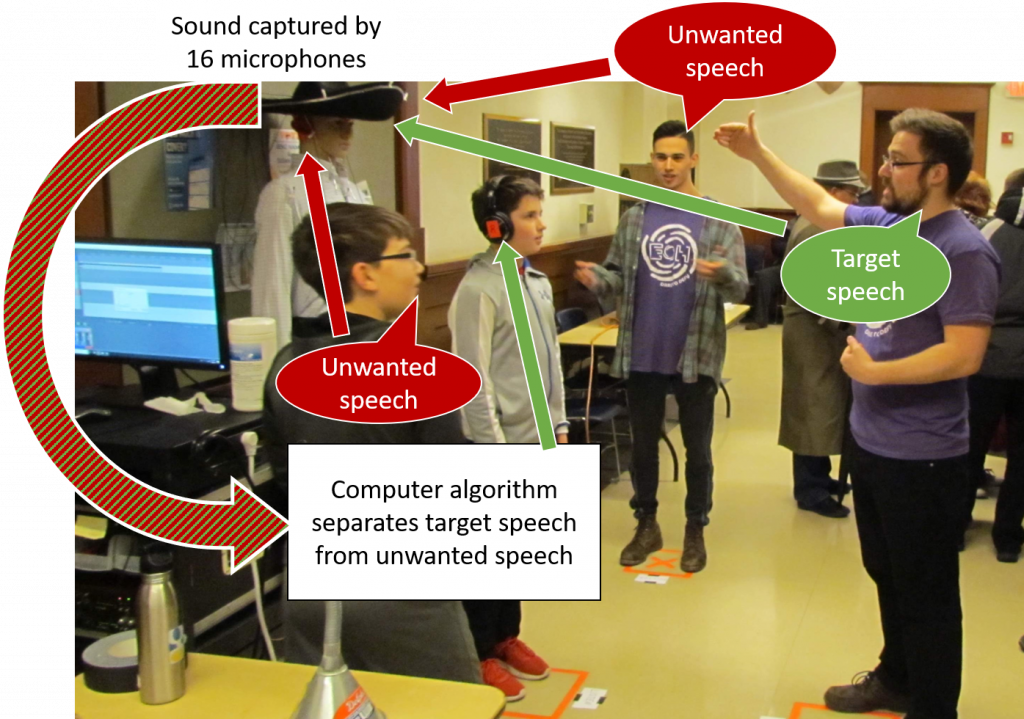

The listening system isolates sound from the talker standing in front and cancels out sound from the talkers on the left and right.

This interactive demo required four people: one to listen through the Sombrearo and three to stand in specially marked locations and talk. The beamformer was designed so that the person in front would be heard clearly, the two talkers on the left and right sides would be silenced, and all the other noise in the room would be reduced as much as possible. Since our visitors tended to be shy, we had to do most of the talking; it was unexpectedly frustrating to stand in the left and right boxes and talk continuously knowing that we wouldn’t be heard! Fortunately, there was plenty of ambient noise from the other exhibits nearby.

Because the hallway of Engineering Hall has strong echoes, a simple directional beamformer would not work well: the sound from the left and right talkers would bounce off the walls and reach the array from multiple angles at once. The signal processing methods we use are more advanced than conventional beamforming and can explicitly account for those echoes when amplifying or canceling out sounds. We used special test sounds to measure the acoustics of the array from those three spots in the room. We also measured the ambient noise, which came mostly from the long hallway to the listener’s left.

Visitors’ impressions of the exhibit reflected the strengths and weaknesses of beamforming for listening devices today. Most agreed that the left and right talkers were strongly attenuated while the talker in front was clear. There were issues, however. Because the computer software we used was not designed for ultra-low-latency processing, there was a noticeable delay between outside sounds and the sound played through the headphones. Low delay is critical for any listening device; a purpose-built real-time processing system could make the delay imperceptible. Another challenge was distortion of background noise. While the three locations for which we calibrated the system were processed correctly, noise from down the hall was sometimes reduced, sometimes amplified, and often distorted. Ensuring that background sounds are processed consistently is a major challenge for researchers. We expect that large arrays using dozens of microphones will help because they can focus on more sounds at the same time.

Guitar effects: Audio processing with programmable logic

To translate our listening enhancement system from a large computer to a wearable device, we are developing a platform using powerful hardware known as a field-programmable gate array (FPGA). Unlike computers and microcontrollers, which run software code, FPGAs implement designs directly in hardware logic. They are fast and powerful, add almost no delay, and can be scaled to large numbers of microphones, but they are notoriously difficult to work with. Our team has been working with the Intel DE1-SoC development board, which combines programmable logic with an Arm processor like those used in mobile phones.

Visitors try out different guitar sound effects implemented on an FPGA system.

Our FPGA listening device is not ready yet, but to demonstrate the power of audio processing on FPGAs, senior Haige Chen designed a set of audio effects for a guitar. Visitors could play Haige’s guitar and hear different effects by flipping switches on the device. These included clipping distortion, echo, and reverb. The reverb effect uses the same basic math as our beamforming system: it adds scaled and delayed copies of the signals together.
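For readers who want to see what "scaled and delayed copies" looks like in practice, here is a small software sketch of two of the effects. Haige's versions run in hardware logic on the FPGA, so this Python is only an illustration of the arithmetic. The function names `clip` and `add_delayed_copies` and the example numbers are assumptions.

```python
def clip(samples, limit):
    """Clipping distortion: flatten any sample that goes beyond +/-limit."""
    return [max(-limit, min(limit, s)) for s in samples]


def add_delayed_copies(samples, taps):
    """Mix a signal with scaled, delayed copies of itself.

    taps: list of (delay_in_samples, gain) pairs. A single tap gives one
    distinct echo; many closely spaced, decaying taps give a crude reverb.
    """
    out = list(samples)
    for delay, gain in taps:
        for t in range(delay, len(samples)):
            out[t] += gain * samples[t - delay]
    return out


# A single "pluck" followed by silence.
pluck = [1.0, 0.0, 0.0, 0.0, 0.0, 0.0]

# One echo, three samples later and half as loud.
echoed = add_delayed_copies(pluck, [(3, 0.5)])
print(echoed)  # [1.0, 0.0, 0.0, 0.5, 0.0, 0.0]

# Clipping squashes the loud samples and leaves the quiet one alone.
print(clip([0.2, 0.9, -1.5], 0.5))  # [0.2, 0.5, -0.5]
```

A beamformer does the same kind of adding, except that the delayed copies come from different microphones instead of from one guitar signal.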

Thanks to Haige Chen, Uriah Jones, Sooraj Kumar, JJ Martinez-Villalba, Manan Mittal, Ben Stoehr, Bryce Tharp, and Naoki Tsuda for helping to prepare and present our successful 2019 Engineering Open House exhibit! This exhibit was supported in part by grants from Microsoft Research and the Amazon Alexa Innovation Fund.