This post describes our paper “Mechatronic Generation of Datasets for Acoustics Research,” presented at the International Workshop on Acoustic Signal Enhancement (IWAENC) in September 2022.

Creating datasets is expensive, whether in time or funding. This is especially true for spatial audio: some applications require hundreds of recordings taken from specific regions in a room, while others involve arranging many microphones and loudspeakers to mimic real-life scenarios – for instance, a conference. Few researchers have access to dedicated recording spaces that can accurately portray acoustically interesting environments, and fewer still are able to create dynamic scenes where microphones and speakers move precisely to replicate how people walk and talk.

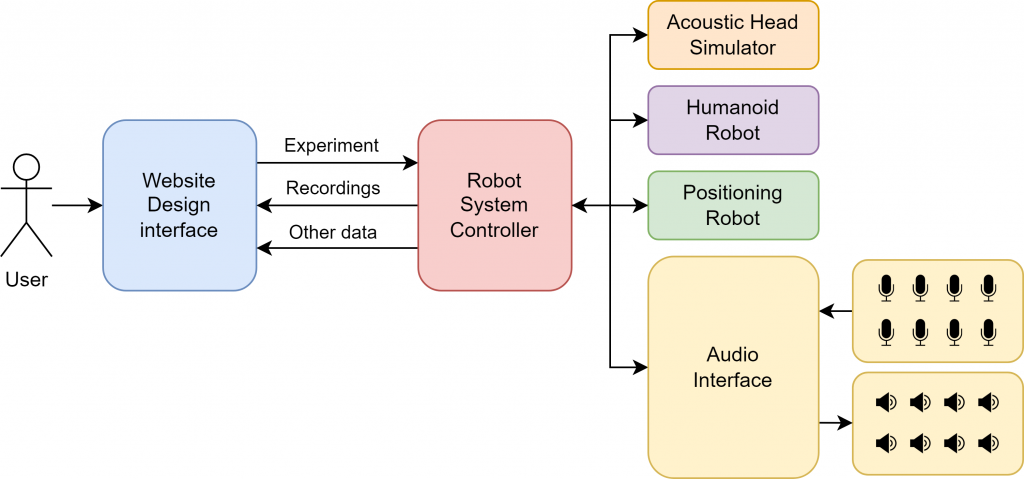

To support the creation of these types of datasets, we propose the Mechatronic Acoustic Research System, or MARS for short. We envision MARS as a robot-enabled recording space that researchers can access remotely. Users could emulate a wide variety of acoustic environments and take recordings with little effort. Our initial concept is a web-based design interface for specifying a complicated experiment, which a robot system then automatically recreates.

How the MARS frontend and backend link together

The first step in developing such a tool is to show that robots and remote access are viable for research-grade audio data collection. We developed a simple scripting API in Python to coordinate the following devices:

- SpiderBot – a cable-driven parallel robot that accurately moves a payload to various positions and heights

- Linear Guide Rail – an actuated platform that slides along a rail

- Acoustic Head Simulator – a 3D-printed model head with two microphones in the ear canals and a speaker at the mouth, capable of rotation

- Audio Interface – a 64-in, 64-out audio interface that can be scripted for audio playback and recording

The SpiderBot carrying an example payload
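To make the idea of a scripting layer concrete, here is a minimal sketch of how a script might address these devices through one object. The `Session` class and all of its method names are hypothetical and are not taken from the MARS API; instead of driving hardware, each call only appends a command to a list so the ordering can be inspected.

```python
from dataclasses import dataclass, field


@dataclass
class Session:
    """Hypothetical stand-in for a device coordinator (not the MARS API).

    Each method logs the command it would send rather than touching hardware.
    """

    commands: list = field(default_factory=list)

    def move_spiderbot(self, x_m, y_m, z_m):
        # Cable-driven robot: move the payload to a position and height.
        self.commands.append(("spiderbot.move", (x_m, y_m, z_m)))

    def move_rail(self, position_m):
        # Linear guide rail: slide the platform along the rail.
        self.commands.append(("rail.move", position_m))

    def rotate_head(self, angle_deg):
        # Acoustic head simulator: rotate the model head.
        self.commands.append(("head.rotate", angle_deg))

    def record(self, channels, seconds):
        # Audio interface: capture the given input channels.
        self.commands.append(("audio.record", (tuple(channels), seconds)))


# Example script: position the head, then record from its two ear microphones.
session = Session()
session.move_rail(0.5)
session.rotate_head(30)
session.record(channels=[0, 1], seconds=2.0)

for command in session.commands:
    print(command)
```

Keeping every device behind a single object is one possible design choice; it gives a script one place to sequence motion and audio commands.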

We designed a custom control framework using the Robot Operating System (ROS) to offer synchronized motion and audio playback or recording. We then ran two types of experiment that are relevant to ongoing research in acoustic signal processing:

- Dense spatial sampling – the Acoustic Head Simulator was positioned by the Linear Guide Rail and rotated to various orientations so that recordings could be taken. This experiment ran autonomously over multiple days.

- Dynamic scenes – a loudspeaker was moved along a path by the SpiderBot for simultaneous motion and playback. Recordings were taken from the Acoustic Head Simulator.
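As an illustration of why dense spatial sampling benefits from automation, the sketch below enumerates every combination of rail position and head angle. The function name and the grid values are assumptions chosen for the example; the actual positions and angles used in the experiment are not given in this post.

```python
from itertools import product


def sampling_plan(rail_positions_m, head_angles_deg):
    """Return every (rail position, head angle) pair.

    The rail position varies slowest, so the rail moves once per full
    sweep of head angles.
    """
    return list(product(rail_positions_m, head_angles_deg))


# Illustrative grid only (assumed values, not the paper's settings).
rail_positions_m = [round(0.1 * i, 1) for i in range(5)]  # 0.0 to 0.4 m
head_angles_deg = list(range(0, 360, 90))                 # 0, 90, 180, 270

plan = sampling_plan(rail_positions_m, head_angles_deg)
print(len(plan), "recordings planned")
print(plan[:3])
```

The number of recordings is the product of the two grid sizes, so even modest refinements of either grid quickly become impractical to carry out by hand.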

Each experiment was repeated multiple times, and the recordings were used to evaluate the repeatability of the system, which was found to be near-ideal.
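One simple way to quantify repeatability between two takes of the same experiment is the energy of their sample-wise difference relative to the energy of the reference take, expressed in decibels. This is an assumed metric chosen for illustration, not necessarily the one used in the paper, and the signals below are synthetic stand-ins rather than real recordings.

```python
import math


def repeat_error_db(reference, repeat):
    """Difference energy between two takes, relative to the reference, in dB.

    More negative values mean the takes are more alike; identical takes
    give negative infinity.
    """
    if len(reference) != len(repeat):
        raise ValueError("recordings must have the same number of samples")
    signal_energy = sum(x * x for x in reference)
    if signal_energy == 0.0:
        raise ValueError("reference recording is silent")
    error_energy = sum((x - y) ** 2 for x, y in zip(reference, repeat))
    if error_energy == 0.0:
        return float("-inf")
    return 10.0 * math.log10(error_energy / signal_energy)


# Synthetic example: a 440 Hz tone at 48 kHz and a copy with a 1% gain change.
take_1 = [math.sin(2 * math.pi * 440 * n / 48000) for n in range(4800)]
take_2 = [0.99 * x for x in take_1]

print(round(repeat_error_db(take_1, take_2), 1), "dB")
```

A sample-wise comparison like this assumes the takes are time-aligned, which is why synchronized motion and audio matter for this kind of evaluation.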

This initial proof-of-concept for MARS showed that an openly accessible data collection workbench is within reach. Currently, we are developing many new robots to integrate with MARS, which will allow for a greater range of experiments. Our website frontend is under development and will be the key component that connects users of our system to a full suite of mechanized recording spaces. The digital twin physics simulation, which the frontend uses to visualize experiments and the backend uses to validate them, is being extended to support the growing roster of devices. With the successful implementation of a MARS prototype, we have moved one step closer to offering custom audio data collection for all.

See our IWAENC 2022 paper, where we discuss the proof-of-concept implementation in detail and introduce MARS to the research community for the first time.