This work was presented at the 2023 Undergraduate Research Symposium, held by the University of Illinois Urbana-Champaign (Poster 61)

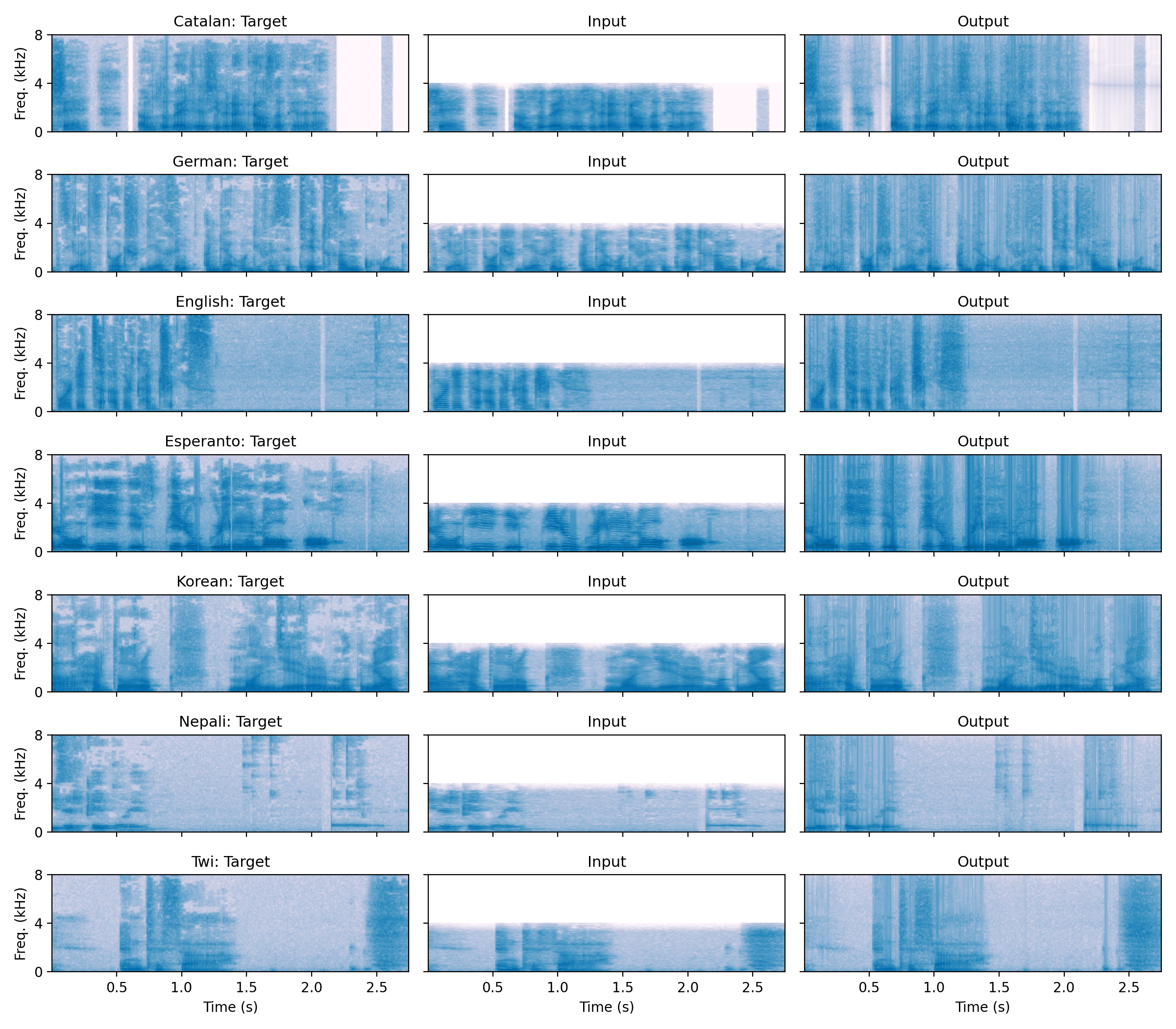

Speech audio sounds good when sampled at 16 kHz, however legacy infrastructure and certain microphones can only capture 8 kHz audio. This can significantly reduce the perceived clarity and intelligibility of speech.

Deep learning provides a way to estimate the lost frequency components, thereby improving quality. This task is called audio super-resolution (or bandwidth extension).

Typically, large datasets of clean audio are required to train such models. However, these datasets do not sufficiently represent all languages, and it is not always possible to train language-specific models for any given language. We investigate how a model trained only on high-quality English recordings generalizes to lower-quality recordings of unseen languages.

The specifics of our model are discussed in our poster at the Undergraduate Research Symposium. Here, we present some results and audio.

We find that our model generalizes well to certain languages, but not others. We provide example audio in the table below. Languages are listed by the attained model accuracy, with English being the most accurate and Catalan being the least so.

| Target (16 kHz) | Input (8 kHz) | Output (16 kHz) | |

|---|---|---|---|

| English | |||

| Korean | |||

| Twi | |||

| German | |||

| Nepali | |||

| Esperanto | |||

| Catalan |

We conjecture that the variance in performance is correlated with the linguistic similarity between the trained English and inference languages. We reserve this analysis for future work.

*Target audio samples are from the Common Voices Corpus, which contains recordings of over 100 languages