Have you ever wondered what it would sound like to listen through sixteen ears? This past March, hundreds of Central Illinois children and families experienced microphone-array augmented listening technology firsthand at the annual Engineering Open House (EOH) sponsored by the University of Illinois College of Engineering. At the event, which attracts thousands of elementary-, middle-, and high-school students and local community members, visitors learned about technologies for enhancing human and machine listening.

Listen up (or down): The technology of directional listening

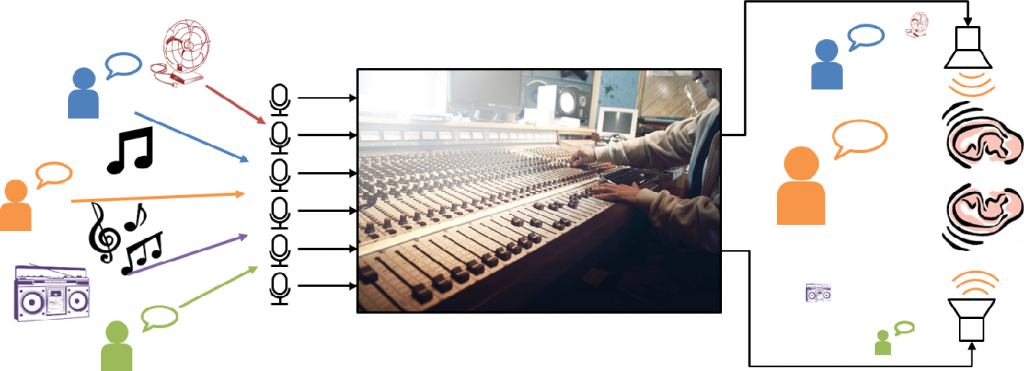

Our team’s award-winning exhibit introduced visitors to several directional listening technologies, which enhance audio by isolating sounds that come from a certain direction. Directional listening is important when the sounds we want to hear are far away, or when there are many different sounds coming from different directions—like at a crowded open house! There are two ways to focus on sounds from one direction: we can physically block sounds from directions we don’t want, or we can use the mathematical tools of signal processing to cancel out those unwanted sounds. At our exhibit in Engineering Hall, visitors could try both.

This carefully designed mechanical listening device is definitely not an oil funnel from the local hardware store.

The oldest and most intuitive listening technology is the ear horn, pictured above. The horn literally funnels sound waves from the direction in which it is pointed. The effect is surprisingly strong, and the two horns we had on display sound noticeably different from each other. The shape of a horn affects both its directional pattern and how strongly it passes sounds of different wavelengths, which we hear as different pitches. The toy listening dish shown below operates on the same principle, but also includes an electronic amplifier. The funnels work much better for directional listening, but the spy gadget is the clear winner for style.

This toy listening dish is not very powerful, but it certainly looks cool!

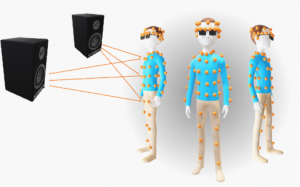

These mechanical hearing aids rely on physical acoustics to isolate sound from one direction. To listen in a different direction, the user has to physically turn the device. Modern directional listening technology instead uses microphone arrays, which are groups of microphones spread apart from each other in space. Because a sound reaches each microphone at a slightly different time, we can use signal processing to compare and combine the recorded signals, either to tell what direction a sound came from or to listen in a certain direction. We can change the listening direction in software, without physically moving the microphones. With sophisticated array signal processing, we can even listen in multiple directions at once, and can compensate for reflections and echoes in the room.
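
For readers who want to see how "compare and combine" can work in practice, here is a minimal sketch of delay-and-sum beamforming, one of the simplest textbook array techniques. It is not necessarily what our exhibit ran. Everything specific in it is an assumption made for illustration: a straight line of sixteen microphones spaced 4 cm apart, a 16 kHz sampling rate, sounds arriving from far away, no echoes, and all of the function names. The idea is to undo the tiny arrival-time differences for one chosen direction and then average the microphones, so that sound from that direction adds up while sound from elsewhere partly cancels.

```python
import numpy as np

SPEED_OF_SOUND = 343.0  # metres per second in air at about 20 °C


def arrival_delays(mic_positions, angle_deg):
    """Arrival time (s) at each microphone, relative to the array centre, for a
    far-away source at angle_deg from straight ahead (positive = toward +x)."""
    return -np.asarray(mic_positions) * np.sin(np.deg2rad(angle_deg)) / SPEED_OF_SOUND


def apply_delays(signals, delays_s, fs):
    """Delay each row of `signals` by the matching entry of `delays_s`.
    Uses an FFT phase shift, so fractional-sample delays work (circularly)."""
    n = signals.shape[-1]
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)
    phase = np.exp(-2j * np.pi * freqs[None, :] * np.asarray(delays_s)[:, None])
    return np.fft.irfft(np.fft.rfft(signals, axis=-1) * phase, n=n, axis=-1)


def simulate_array(source, mic_positions, angle_deg, fs):
    """Idealised recording: every microphone hears a delayed copy of `source`."""
    copies = np.tile(source, (len(mic_positions), 1))
    return apply_delays(copies, arrival_delays(mic_positions, angle_deg), fs)


def delay_and_sum(recordings, mic_positions, look_angle_deg, fs):
    """Listen toward look_angle_deg: undo that direction's delays, then average."""
    undo = -arrival_delays(mic_positions, look_angle_deg)
    return apply_delays(recordings, undo, fs).mean(axis=0)


def estimate_direction(recordings, mic_positions, fs, angles_deg=np.arange(-90, 91)):
    """Scan candidate angles and return the one with the loudest beamformer output."""
    powers = [np.mean(delay_and_sum(recordings, mic_positions, a, fs) ** 2)
              for a in angles_deg]
    return angles_deg[int(np.argmax(powers))]


# Demo with made-up signals: a "talker" straight ahead and "noise" from 60 degrees.
rng = np.random.default_rng(0)
fs = 16000                                  # assumed sampling rate in Hz
mics = (np.arange(16) - 7.5) * 0.04         # assumed: 16 mics, 4 cm apart, centred
talker = rng.standard_normal(4096)
noise = rng.standard_normal(4096)
recordings = (simulate_array(talker, mics, 0.0, fs)
              + simulate_array(noise, mics, 60.0, fs))

focused = delay_and_sum(recordings, mics, 0.0, fs)
print("noise power at one microphone:", np.mean((recordings[0] - talker) ** 2))
print("noise power after beamforming:", np.mean((focused - talker) ** 2))
print("estimated direction of a lone source:",
      estimate_direction(simulate_array(talker, mics, 30.0, fs), mics, fs))
```

Changing the listening direction only means passing a different `look_angle_deg`; nothing about the simulated microphones moves. Real rooms add echoes and real microphones add noise, which is where the more sophisticated array processing comes in.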