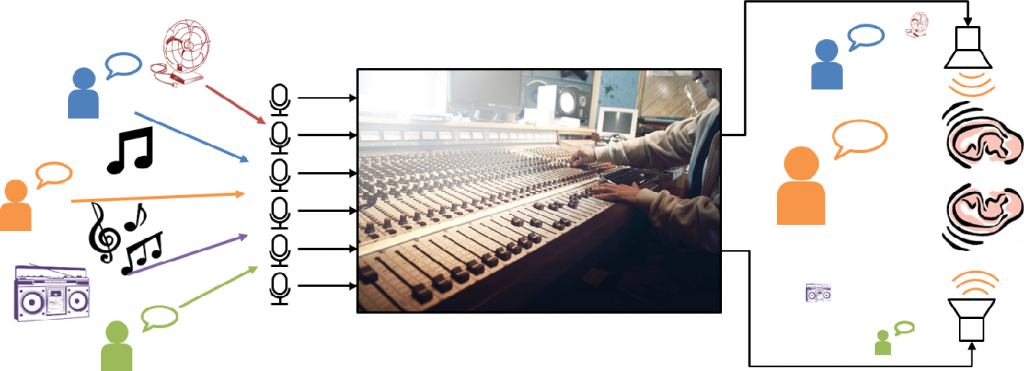

Augmented listening systems “remix” the sounds we perceive around us, making some louder and some quieter.

I am one of millions of people who suffer from hearing loss. For my entire life I’ve known the frustration of asking people to repeat themselves, struggling to communicate over the phone, and skipping social events because I know they’ll be too noisy. Hearing aids do help, but they don’t work well in the noisy, crowded situations where I need them the most. That’s why I decided to devote my PhD thesis to improving the performance of hearing aids in noisy environments.

As my research progressed, I realized that this problem is not limited to hearing aids, and that the technologies I am developing could also help people who don’t suffer from hearing loss. Over the last few years, there has been rapid growth in a product category that I call augmented listening (AL): technologies that enhance human listening abilities by modifying the sounds they hear in real time. Augmented listening devices include:

- traditional hearing aids, which are prescribed by a clinician to patients with hearing loss;

- low-cost personal sound amplification products (PSAPs), which are ostensibly for normal-hearing listeners;

- advanced headphones, sometimes called “hearables,” that incorporate listening enhancement as well as features like heart-rate sensing; and

- augmented- and mixed-reality headsets, which supplement real-world sound with extra information.

These product categories have been converging in recent years as hearing aids add new consumer-technology features like Bluetooth and headphone products promise to enhance real-world sounds. Recent regulatory changes that allow hearing aids to be sold over the counter will also help to shake up the market.

Enhancing human perception

Augmented listening devices can change a listener’s perception of sound in several ways. The most basic type of processing, common to even the cheapest generic hearing aids, is amplification, or making sounds louder. Most prescription hearing aids have different amplification settings at different frequencies based on an individual’s hearing loss. Typically, high frequencies are amplified more than low frequencies. \ Advanced hearing aids also feature dynamic range compression, which changes amplification settings over time so that quiet sounds are boosted more than loud sounds. Compression is important because it protects listeners from loud sounds and helps to correct for the reduced dynamic range of hearing-impaired ears.

The environments in which it is hardest to hear—with or without hearing loss—are noisy, reverberant rooms with many people talking at once. Thus, a useful augmented listening system should help users to focus on the sounds they want to hear, like speech from a conversation partner, and tune out everything else, like background babble and mechanical noise. Noise-canceling headphones are good at blocking steady, predictable noise like airplane engines and air conditioners, and modern hearing aids do a good job at canceling feedback and wind noise. But the goal of separating one human voice from a crowd of other human voices remains elusive—despite what hearing aid marketing materials would lead you to believe!

Ideally, an AL system would work like the mixing board in a music studio: each sound source would have its own slider, and the user—or an algorithm—would decide how much of each source should end up in the final mix. The system would need to identify which sounds are coming from what source, what kinds of sounds they are, and whether the user should hear more or less of them. It could also apply separate processing to each source: different equalization and compression settings for music and speech, for example, or the holy grail of audio augmented reality, real-time audio translation from one language to another.

Augmented listening challenges

There is no hearing aid or consumer gadget on the market today that can do this kind of real-time remixing. There are several unsolved research problems that must be addressed first. The system would need to decide how many sound sources are present, what kind of sounds they are, and where they are in the room; this problem is known as auditory scene analysis. It would also need to decide how much of each source the user should hear, which is an open question for hearing and psychoacoustics researchers. Next, it would need to separate the different sound sources captured by the microphones, process them, and put them back together, a process known as source separation or source remixing. That signal processing challenge is the focus of our research group.

Source separation and remixing are more difficult for human augmented listening applications than they are for machine listening applications such as speech recognition, which get more attention from researchers. Listening devices must process sound sources in real time, with no more than a few milliseconds of delay between the microphone and the ear to avoid disturbing distortion or echoes. To ensure that the sounds coming out of the earpiece seem natural to the listener, the frequency content shouldn’t change too much and there shouldn’t be any processing artifacts like clicks or pops. Finally, AL systems must preserve the spatial cues of the sound sources, such as time delays and loudness differences between the left and right ears, so that the listener can tell what sounds are coming from what directions.

Array processing for augmented listening

Separating sound sources is difficult, and doing it in real time with imperceptible delay and distortion is a truly daunting task. Even humans have trouble hearing in crowded rooms, after all. Fortunately, while humans are limited to just two ears, listening devices can have dozens or even hundreds of microphones. Microphone arrays use subtle time-of-flight differences between signals at different sensors to determine what sounds are coming from what directions, track them as they move, and separate the signals spatially.

Array technology is commonly used in audio conferencing equipment and smart speakers to focus on one person’s voice from across the room. The Amazon Echo has seven microphones, for example, and the Microsoft Kinect has four. Many commercial hearing aids and other AL devices also have multiple microphones, usually just two spaced a few millimeters apart. These arrays are too small to help separate sounds in crowded rooms.

We believe that using large arrays spread across the body or around a room could help to analyze complex sound scenes, track sources and users as they move, and reduce the delay and distortion constraints that hold back listening devices today. We are studying these listening-specific constraints, developing new theory and algorithms for augmented listening, and building prototypes and data sets to demonstrate the potential of microphone arrays to improve human listening. Humans are already surrounded by dozens of microphones; we hope that by leveraging these microphones to spatially analyze and process sounds, we can help everyone to hear better even in the noisiest environments.