This post describes our new wearable microphone impulse response data set, which is available for download from the Illinois Data Bank and is the subject of a paper at ICASSP 2019.

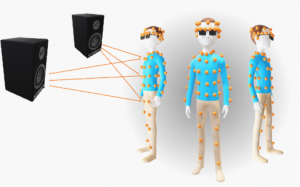

Acoustic impulse responses were measured from 24 source angles to 80 points across the body.

Have you ever been at a crowded party and struggled to hear the person next to you? Crowded, noisy places are some of the most difficult listening environments, especially for people with hearing loss. Noisy rooms are also a challenge for electronic listening systems, like teleconferencing equipment and smart speakers that recognize users’ voices. That’s why many conference room microphones and smart speakers use as many as eight microphones instead of just one or two. These arrays of microphones, which are usually laid out in a regular pattern like a circle, let the device focus on sounds coming from one direction and block out other sounds. Arrays work like camera lenses: larger lenses can focus light more narrowly, and arrays with more microphones spread out over a larger area can better distinguish between sounds from different directions.
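The camera-lens analogy can be made concrete with a toy calculation. The sketch below is illustrative only and is not from the data set or paper. It assumes an idealized delay-and-sum beamformer on a straight line of identical microphones, in free field, with a far-away source. It shows that the beam gets narrower as the same 2 cm spacing is extended to more microphones and a larger aperture. The function names, the 2 kHz test frequency, and the 343 m/s speed of sound are all hypothetical choices.

```python
import numpy as np

SPEED_OF_SOUND = 343.0  # m/s, assumed room-temperature value


def beam_pattern(num_mics, spacing, freq, angles):
    """Magnitude response of a delay-and-sum uniform linear array steered to broadside.

    Idealized model (an assumption): free field, far-field plane waves, identical mics.
    """
    positions = np.arange(num_mics) * spacing      # mic positions along a line (m)
    k = 2 * np.pi * freq / SPEED_OF_SOUND          # wavenumber (rad/m)
    # Relative phase of a plane wave from each angle at each mic: shape (angles, mics)
    phases = np.exp(1j * k * np.outer(np.sin(angles), positions))
    # Broadside steering needs no delays, so delay-and-sum is just the average
    return np.abs(phases.mean(axis=1))


def mainlobe_width_deg(num_mics, spacing, freq):
    """Angular width (degrees) over which the response stays within 3 dB of its peak.

    Spacing should stay below half a wavelength so no grating lobes appear.
    """
    angles = np.radians(np.linspace(-90.0, 90.0, 3601))
    response = beam_pattern(num_mics, spacing, freq, angles)
    inside = angles[response >= 1 / np.sqrt(2)]
    return np.degrees(inside.max() - inside.min())


# Same 2 cm spacing (under half the ~17 cm wavelength at 2 kHz), more microphones
for n in (2, 4, 16):
    width = mainlobe_width_deg(n, spacing=0.02, freq=2000.0)
    print(f"{n:2d} mics, {100 * 0.02 * (n - 1):.0f} cm aperture: {width:.0f} degree beam")
```

In this toy model, two microphones 2 cm apart barely focus at all: their response stays within 3 dB across the whole half-plane. Sixteen microphones spanning 30 cm form a beam a few tens of degrees wide. Real wearable arrays are irregular and are shaped by the body's acoustics, which is why measured impulse responses are needed.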

Wearable microphone arrays

Microphone arrays are also sometimes used in listening devices, including hearing aids and the emerging product category of smart headphones. These array-equipped devices can help users to tune out annoying sounds and focus on what they want to hear. Unfortunately, most hearing aids only have two microphones spaced a few millimeters apart, so they aren’t very good at focusing in one direction. What if hearing aids—or smart headphones, or augmented reality headsets—had a dozen microphones instead of just two? What if they had one hundred microphones spread all over the user’s body, attached to their clothing and accessories? In principle, a large wearable array could provide far better sound quality than listening devices today.

Over the years, there have been several papers about wearable arrays: vests, necklaces, eyeglasses, helmets. It’s also a popular idea on crowdfunding websites. But there have been no commercially successful wearable microphone array products. Although several engineers have built these arrays, no one has rigorously studied their design tradeoffs. How many microphones do we need? How far apart should they be? Does it matter what clothes the user is wearing? How much better are they than conventional listening devices? We developed a new data set to help researchers answer these questions and to explore the possibilities of wearable microphone arrays.

Collecting the data

Our new data set contains over 8000 acoustic impulse responses (AIRs), mathematical functions that describe how sound propagates from one point to another. There is a different AIR for each pair of sound source (human talker, loudspeaker, air conditioner, ukulele, etc.) and microphone. There are two reasons for measuring AIRs. First, if we know the AIRs from every source to every microphone, then we can use the signals captured by the microphones to figure out which sound came from which source. It's like solving a system of equations, except that the equations also account for echoes and reverberation in the room. Second, AIRs let us simulate the signals we would have recorded with any combination of the measured microphone positions and sound source locations. We can take sound that we've recorded in advance (preferably in an anechoic chamber, though a quiet room works too) and calculate what it would have sounded like to each microphone if we'd played it over our loudspeakers. That way, researchers around the world can run experiments using our laboratory setup without having to build their own wearable arrays.
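The simulation step works because sound propagation in a room is, to a good approximation, linear. Each microphone signal is the sum, over all sources, of that source's dry signal convolved with its AIR. The sketch below shows this under stated assumptions. The `airs[m, s]` array layout and the `simulate_mixture` name are hypothetical and do not describe the data set's actual file format. The AIRs and dry recordings are also assumed to share one sample rate.

```python
import numpy as np


def simulate_mixture(sources, airs):
    """Simulate microphone signals from dry source signals and AIRs.

    sources: list of S 1-D arrays (e.g. anechoic recordings), same sample rate as the AIRs
    airs:    array of shape (M, S, L); airs[m, s] is the AIR from source s to mic m
             (hypothetical layout, not the data set's file format)
    returns: array of shape (M, N + L - 1), N being the longest source length
    """
    num_mics, num_sources, air_len = airs.shape
    if len(sources) != num_sources:
        raise ValueError("need one source signal per AIR source index")
    n = max(len(x) for x in sources)
    out = np.zeros((num_mics, n + air_len - 1))
    for m in range(num_mics):
        for s, x in enumerate(sources):
            y = np.convolve(x, airs[m, s])  # source s as heard at mic m
            out[m, :len(y)] += y            # contributions add linearly at each mic
    return out


# Toy usage with random "talkers" and made-up exponentially decaying AIRs
rng = np.random.default_rng(0)
toy_airs = rng.standard_normal((4, 2, 256)) * np.exp(-np.arange(256) / 40)
talkers = [rng.standard_normal(16000), rng.standard_normal(12000)]
mics = simulate_mixture(talkers, toy_airs)  # shape (4, 16255)
```

Separating the sources runs the same model in reverse. In the frequency domain each convolution becomes a multiplication, so every frequency bin gives a small linear system relating source spectra to microphone spectra.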

To measure the acoustic impulse responses, Ryan Corey and Naoki Tsuda set up loudspeakers in a ring around the microphone array user. Each loudspeaker plays back a test signal that is recorded by all the microphones in the array. The test signal is a linear frequency sweep, also known as a chirp, that covers all the frequencies that humans can hear. From the recordings, we can calculate the AIRs for each speaker-microphone pair. The equipment in our lab can record from 16 microphones at once, so we moved the microphones several times to record different parts of the body. The data set has 80 microphone positions on the body, another 80 on wearable accessories such as hats and headphones, and both human and mannequin test subjects. We also captured AIRs with different types of clothing.
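The post does not spell out how the AIRs were computed from the chirp recordings. One standard approach, shown here as a sketch rather than the authors' exact pipeline, is to divide the recording's spectrum by the sweep's spectrum. The function names, the relative regularization constant, and the toy two-tap "room" below are hypothetical.

```python
import numpy as np


def linear_sweep(f0, f1, duration, fs):
    """Linear frequency sweep (chirp) from f0 to f1 Hz lasting `duration` seconds."""
    t = np.arange(int(round(duration * fs))) / fs
    # Instantaneous frequency rises linearly: f(t) = f0 + (f1 - f0) * t / duration.
    # Integrating f(t) over time gives the phase below.
    phase = 2 * np.pi * (f0 * t + 0.5 * (f1 - f0) * t ** 2 / duration)
    return np.sin(phase)


def estimate_air(recording, sweep, air_len, rel_eps=1e-10):
    """Estimate an AIR from a recorded sweep by regularized spectral division."""
    # Zero-pad so that FFT (circular) convolution matches linear convolution
    n = len(recording) + len(sweep)
    X = np.fft.rfft(sweep, n)
    Y = np.fft.rfft(recording, n)
    # Divide Y by X; the small regularizer keeps bins where the sweep has
    # almost no energy from blowing up (matters once recordings contain noise)
    reg = rel_eps * np.max(np.abs(X) ** 2)
    H = Y * np.conj(X) / (np.abs(X) ** 2 + reg)
    return np.fft.irfft(H, n)[:air_len]


# Toy check with a made-up two-tap "room": direct path plus one echo
fs = 16000
sweep = linear_sweep(20.0, 7000.0, duration=0.5, fs=fs)
true_air = np.zeros(64)
true_air[5], true_air[41] = 1.0, 0.3
recording = np.convolve(sweep, true_air)  # noiseless simulated measurement
air = estimate_air(recording, sweep, air_len=64)
```

A real measurement would use a sweep spanning the audible range (roughly 20 Hz to 20 kHz at a sample rate such as 48 kHz). It would also pick `air_len` long enough to capture the room's reverberation tail. The toy example uses a short band-limited sweep only so that it runs quickly.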

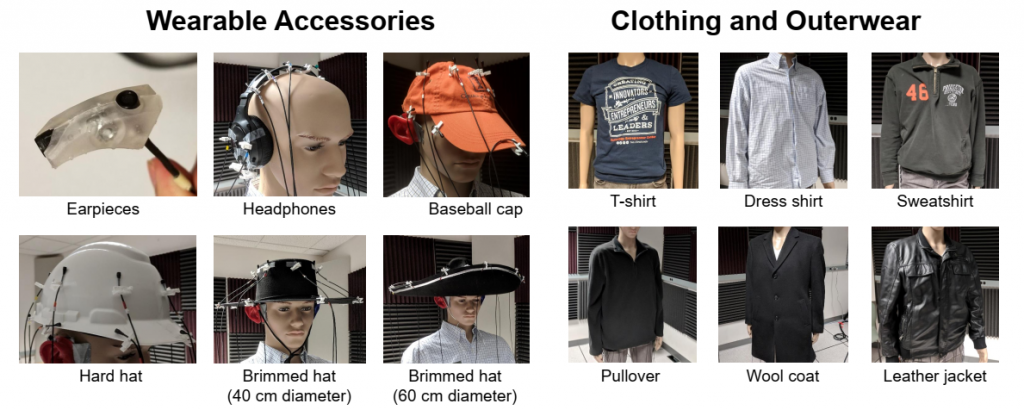

Microphones were affixed to various wearable accessories and were also tested under different layers of clothing.

In total, we recorded almost 80 GB of raw audio data! Fortunately, the calculated impulse responses are only about 100 MB. The data set and documentation are archived on the Illinois Data Bank, a long-term data repository maintained by the University of Illinois Library. It is distributed under a Creative Commons Attribution (CC-BY) license, which means that anyone can use and modify it for free if they acknowledge the source.

The microphones were placed where people are likely to wear electronic devices. There were three microphones on each ear: one just outside the ear canal, to help reproduce sound as a person would hear it, and two in a 3D-printed behind-the-ear earpiece made by industrial design student Uriah Jones. Another ten microphones were clipped to a pair of glasses—microphone array glasses are a perennial favorite on crowdfunding sites—and eight each were placed in rings around the forehead and neck. The remaining 48 microphones were spread across the torso, arms, and legs.

Analyzing the results

One challenge of doing research on wearable microphone arrays is that human subjects are difficult to work with: we have to fill out paperwork and pay them for their time, they can never hold perfectly still or say the same thing exactly the same way twice, and we can't disassemble them for storage or run wires inside their limbs. One goal of this project was to determine whether we can use plastic mannequins as a human analogue. Mannequins work for free and don't get annoyed listening to chirps all day, but do they have the same acoustic effects as real human bodies? Our results suggest that they do! The attenuation caused by human and mannequin torsos agrees to within a few decibels. The least realistic part of the plastic mannequin is its head; fortunately, there are plentiful existing data sets of head-related impulse responses from real humans and high-quality acoustic head dummies.

Another complication of wearable microphone arrays is clothing. Microphone technology is advancing quickly, and one could imagine acoustic sensors that can be easily integrated into clothing and accessories. How well would wearable microphone arrays work under layers of clothing? We measured AIRs to 16 microphones on the torso while they were covered with a cotton t-shirt, a cotton dress shirt, a cotton sweater, a polyester pullover, a wool coat, and a leather jacket. The coat and jacket both blocked high-frequency sound from reaching the microphones underneath, but the more breathable layers, including the heavy sweater and pullover, did not. Since the most difficult listening environments are indoors, where users are unlikely to wear heavy jackets, clothing should not be a problem for wearable arrays.
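The post does not say exactly how the clothing attenuation was quantified. One simple metric, sketched here as an assumption, compares the energy of the bare and covered AIRs within a frequency band and expresses the difference in decibels. The function names and band edges are hypothetical, and both AIRs are assumed to have the same length and sample rate.

```python
import numpy as np


def band_energy_db(air, fs, f_lo, f_hi):
    """Energy of an AIR within [f_lo, f_hi) Hz, in dB (arbitrary reference)."""
    spectrum = np.fft.rfft(air)
    freqs = np.fft.rfftfreq(len(air), d=1 / fs)
    band = (freqs >= f_lo) & (freqs < f_hi)
    return 10 * np.log10(np.sum(np.abs(spectrum[band]) ** 2))


def clothing_attenuation_db(air_bare, air_covered, fs, f_lo, f_hi):
    """How many dB less energy reaches the covered mic in the given band."""
    if len(air_bare) != len(air_covered):
        raise ValueError("compare AIRs of equal length so FFT bins line up")
    return (band_energy_db(air_bare, fs, f_lo, f_hi)
            - band_energy_db(air_covered, fs, f_lo, f_hi))


# Toy example: a 2-tap average acts like a fabric that muffles high frequencies
fs = 16000
bare = np.zeros(512)
bare[0] = 1.0
muffled = np.zeros(512)
muffled[:2] = 0.5
low = clothing_attenuation_db(bare, muffled, fs, 100, 1000)    # small loss
high = clothing_attenuation_db(bare, muffled, fs, 4000, 8000)  # larger loss
```

Computing the metric separately for low and high bands captures the pattern described above. A coat or jacket would show a large high-band loss, while breathable layers would stay near 0 dB in both bands.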

So, how much benefit is there to using 80 microphones instead of just two or four? We compared the performance of different array configurations for isolating one talker out of a group of six simultaneous speech sources. A binaural two-microphone array cut the unwanted noise power in half (3 dB gain). The microphone-covered eyeglasses reduced it by a factor of about 15 (12 dB gain), and the full array of 80 microphones reduced background noise by a factor of about 60 (18 dB gain)! Among the wearable accessories, the 60-cm brimmed hat (aka Sombrearo) worked best and the headphones were the least effective.
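The factors above follow from the decibel definition for power ratios, 10^(dB/10). The small helper below is hypothetical and simply makes that conversion explicit for the gains reported in the post.

```python
def db_to_power_ratio(gain_db):
    """Convert a gain in decibels to the corresponding power ratio."""
    return 10 ** (gain_db / 10)


# Array gains as reported in the post
reported_gains_db = {"binaural pair": 3, "eyeglasses": 12, "full 80-mic array": 18}
for config, gain_db in reported_gains_db.items():
    ratio = db_to_power_ratio(gain_db)
    print(f"{config}: {gain_db} dB -> noise power reduced about {ratio:.0f}x")
```

This prints ratios of about 2, 16, and 63. These are close to the factors of two, 15, and 60 quoted above, and small differences are expected if the reported decibel values are rounded. Every additional 10 dB corresponds to another factor of ten in noise power.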

Our experiments showed that large wearable arrays work much better than conventional hearing aid earpieces at reducing noise and isolating a single voice in a crowd. This new data set will help other researchers to explore new applications of array processing using wearable microphones. We hope that it will help to drive innovation in augmented listening technology for years to come!