I am delighted to announce that I have accepted a position as an assistant professor of Electrical and Computer Engineering at the University of Illinois Chicago (UIC)! The position is a first-of-its-kind dual appointment with the Discovery Partners Institute (DPI), a new University of Illinois innovation hub in Chicago dedicated to equitable economic development.

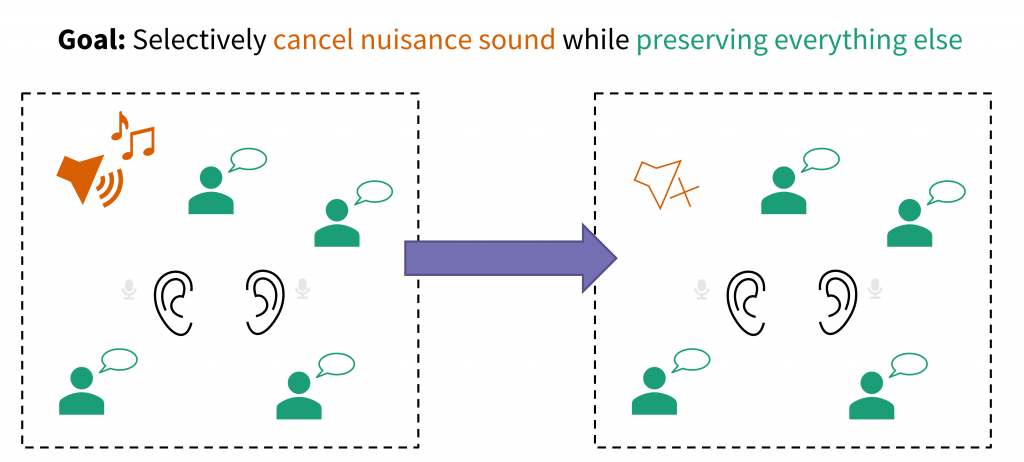

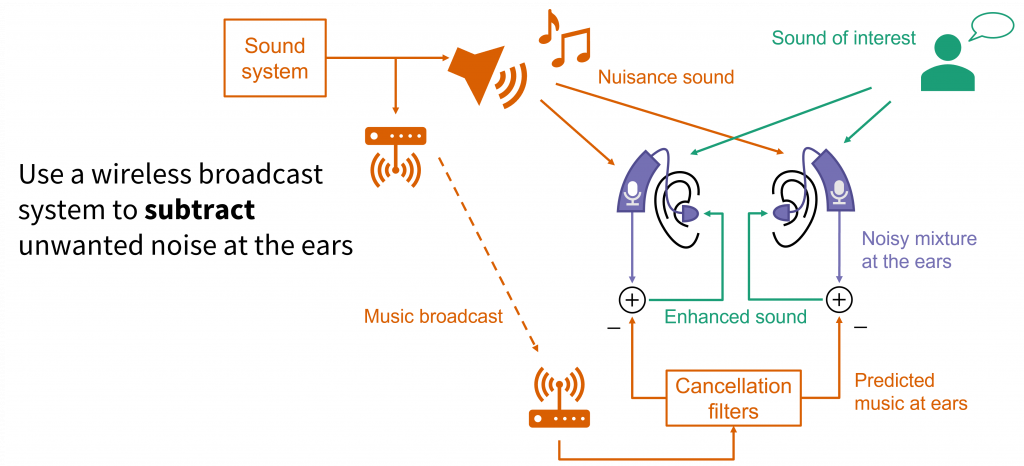

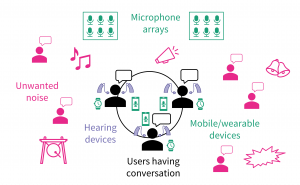

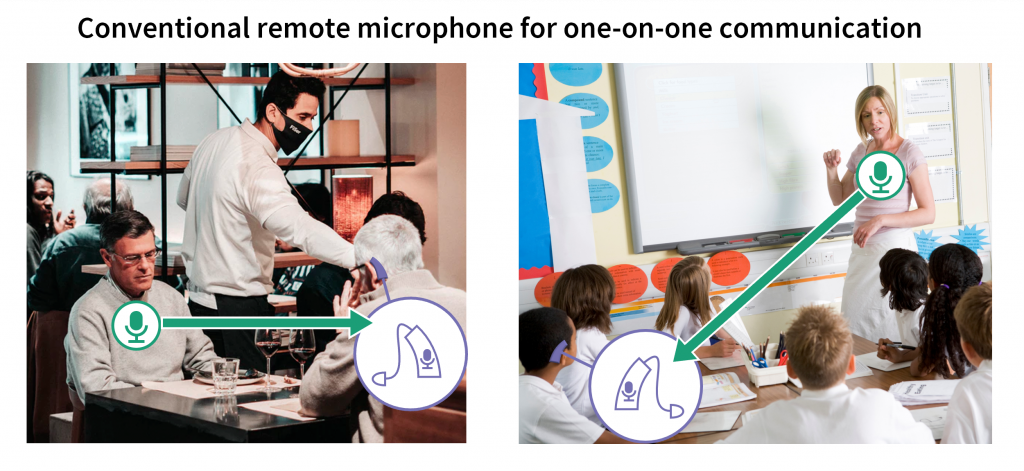

I look forward to expanding my research program on assistive and augmentative listening technology. As I establish my independent research career, I hope to build new collaborations with audiologists and hearing scientists to investigate the clinical applications of advanced audio technology. I will also collaborate with other scientists in the Applied Research and Development group at DPI on broader problems in human-centric computing, sensor networks, robotics, privacy, and accessible technology.

I am currently seeking a few talented and passionate PhD students to help launch my new research group at UIC and DPI. If you are interested, please get in touch!

Over the next few weeks, I will be transitioning this website to a new UIC laboratory page.