Sponsored by NVIDIA!

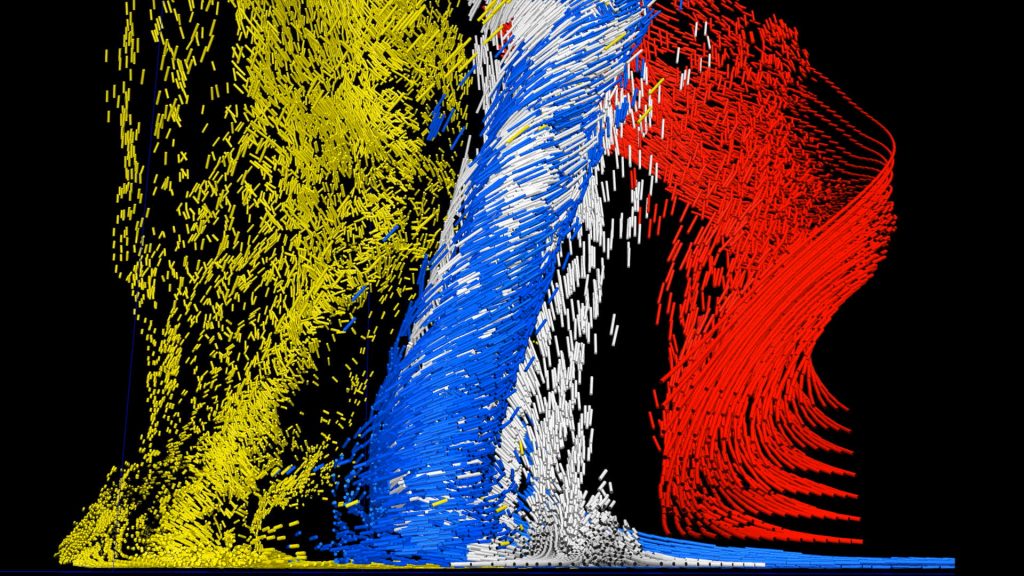

Blue Waters – a supercomputer housed at the University of Illinois – was used in 2017 to produce the most detailed simulations of an EF-5 tornado to date.

The High-Performance Computing session will focus on research into designing software that takes advantage of today’s increasingly powerful computational hardware. As we enter the era of exascale computing, in which machines perform at least 10^18 operations per second and processing power is expected to meet or surpass that of the human brain, it is increasingly vital to develop science and engineering codes that fully and effectively exploit these massive resources. Research on communication efficiency, performance modeling, GPU programming, and parallel algorithms sits firmly at the forefront of this effort, and this session aims to highlight numerical and computational tools that address these areas.

Summit – a supercomputer housed at Oak Ridge National Laboratory – performed the first exascale calculation in 2018, while analyzing genomic data.

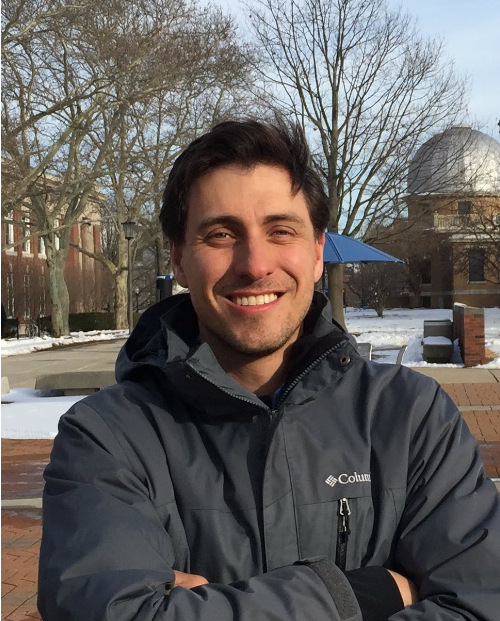

Keynote Speaker – Dr. Richard Vuduc, School of Computational Science and Engineering, Georgia Tech

Communication-avoiding Sparse Direct Solvers for Linear Systems and Graph Computations

Abstract:

Bio

Richard (Rich) Vuduc is a Professor at the Georgia Institute of Technology (“Georgia Tech”). He works in the School of Computational Science and Engineering, a department devoted to the study of computer-based modeling, simulation, and data-driven analysis of natural and engineered systems. His research lab, The HPC Garage (@hpcgarage), focuses on high-performance computing, with an emphasis on algorithms, performance analysis, and performance engineering. His honors include a DARPA Computer Science Study Group grant; an NSF CAREER award; a collaborative Gordon Bell Prize (2010); the Lockheed-Martin Aeronautics Company Dean’s Award for Teaching Excellence (2013); and Best Paper or Best Student Paper Awards at the SIAM Conference on Data Mining (SDM, 2012), the IEEE Parallel and Distributed Processing Symposium (IPDPS, 2015), and the ACM/IEEE Conference on Supercomputing (SC, 2018), among others. He has also served as his department’s Associate Chair and as Director of its graduate programs.

Outside Georgia Tech, he currently serves as Chair of the SIAM Activity Group on Supercomputing (2018-2020) and as an associate editor of the ACM Transactions on Parallel Computing (TOPC) and the International Journal of High-Performance Computing Applications. He previously served as an associate editor of the IEEE Transactions on Parallel and Distributed Systems and co-chaired the Technical Papers Program of the “Supercomputing” (SC) Conference in 2016. He received his Ph.D. in Computer Science from the University of California, Berkeley, and was a postdoctoral scholar in the Center for Applied Scientific Computing at Lawrence Livermore National Laboratory.

Student Speakers

| Speaker | Affiliation | Talk |
|---|---|---|
| Jaemin Choi | UofI | Improving the Performance of Overdecomposed Applications on GPU-accelerated Systems (Technical Session Award) |
| Ramchandran Muthukumar (Invited Speaker) | JHU | Randomized Sketching Algorithms for Low Memory Dynamic Optimization |
| Thiago Teixeira | UofI | A Language for Programming Optimization Spaces |
| Charbel Sakr | UofI | Finite Precision Deep Learning with Theoretical Guarantees |
| Ameya Patil | UofI | Boosted Spin Channel Networks for Energy-efficient Inference |

Contact

For additional details, feel free to contact the session chair, Cory Mikida.