Machine Learning and Signal Processing Session

9:00 am to 12:00 pm, February 23 in person at CSL B02

Advances in machine learning and signal processing have revolutionized capabilities in a variety of fields, with innovations and applications emerging at an increasing pace. The Machine Learning and Signal Processing session of the CSL student conference will cover a broad range of topics, from theoretical machine learning to imaging and sensing. The session will include a keynote address from Professor Kangwook Lee of the University of Wisconsin-Madison, a prominent researcher working to develop better machine learning algorithms and systems; an invited talk from Robert Serafin, a PhD student at the University of Washington studying deep learning-assisted 3D pathology; and four contributed talks from University of Illinois students.

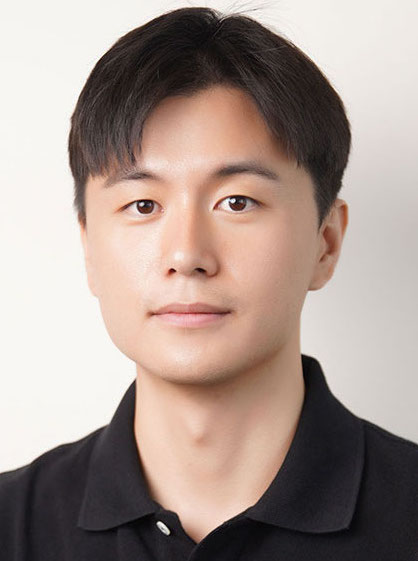

Keynote Speaker – Kangwook Lee, University of Wisconsin-Madison

“Score-based Generative Modeling Secretly Minimizes the Wasserstein Distance”

Talk Abstract: In my talk, I’ll present the findings from my recent research on score-based diffusion models. I’ll start with a gentle overview of these models, which are best known through DALL-E and Stable Diffusion. I’ll then share my latest discovery that score-based diffusion models are secretly minimizing the Wasserstein distance between the generated and data distributions. I’ll explain the significance of this result and provide numerical evidence to support it. I’ll also briefly touch on ongoing projects, including our faster sampling algorithm, and on open problems. This presentation is based on collaborative work with Dohyun Kwon and Ying Fan.

Biography: Kangwook is an Assistant Professor in the Electrical and Computer Engineering department and the Computer Sciences department (by courtesy) at the University of Wisconsin-Madison. He also leads deep learning research at Krafton. Previously, he was a Research Assistant Professor at the Information and Electronics Research Institute of KAIST. Before that, he was a postdoctoral scholar at the same institute. He received his PhD in May 2016 from the Electrical Engineering and Computer Science department at UC Berkeley and his Master of Science degree from the same department in December 2012. He received his Bachelor of Science degree in Electrical Engineering from KAIST in May 2010.

Invited Student Speaker – Rob Serafin, University of Washington

“Deep learning assisted 3D pathology for prostate cancer risk assessment”

Talk Abstract: Prostate cancer treatment decisions are heavily reliant upon the subjective visual interpretation (assigning Gleason patterns, or ISUP grade groups) of a limited number of 2D histology sections. Under this paradigm, inter-pathologist variance is high, and ISUP grades correlate poorly with individual patient outcomes. In contrast, non-destructive 3D pathology achieves orders-of-magnitude greater microscopic sampling than conventional slide-based histology. Further, it enables objective volumetric quantification of diagnostically significant microarchitectures. To explore the prognostic value of 3D pathology in prostate cancer, we use open-top light-sheet (OTLS) microscopy to image simulated biopsies extracted from archived specimens from patients with known 5-year outcomes. However, since a single 3D pathology dataset can be tens to hundreds of gigabytes in size, manual examination of such data is tedious. Thus, there is a need for computational tools to facilitate clinical interpretation of 3D pathology data.

Given that 3D pathology is a nascent technology, our first goal is to extract interpretable 3D features from the tissue microstructures that pathologists commonly use for prostate cancer diagnosis, and to identify those features that correlate with known patient outcomes. This approach requires first being able to identify structures of interest within large 3D images of whole biopsies. Here we present two deep learning-based segmentation methods used to investigate the prognostic significance of 3D microstructures (glands and nuclei) in our OTLS datasets. Prostate gland segmentations were generated using a method we developed called Image Translation Assisted Segmentation in 3D (ITAS3D). ITAS3D enables detection of molecular targets expressed within prostate glands without the need for antibody labeling. For nuclear segmentation, we adapted a previously published method (Cellpose) for our 3D pathology datasets. Using a traditional machine learning classifier, we demonstrate that the 3D features of prostate cancer glands and nuclei are more strongly associated with patient outcomes than analogous 2D features, which could improve critical treatment decisions for prostate cancer patients in the future.

Biography: Rob Serafin is a fourth-year student in the Mechanical Engineering PhD program at the University of Washington. His research focuses on developing computational methods for prognostic analysis of large 3D microscopy datasets of clinical specimens.

Student Speakers

Junyan He

“A deep learning energy-based method for classical elastoplasticity”

Moulik Choraria

“Learning Optimal Features via Partial Invariance”

Varun A. Kelkar

“AmbientFlow: Learning invertible generative models from noisy and incomplete imaging measurements”

Vlas Zyrianov

“Learning to Generate Realistic LiDAR Point Clouds”

CONTACT US

For more information, please contact the session chairs, Janet Sorrells (janetes2@illinois.edu) and Seiyun Shin (seiyuns2@illinois.edu).