Research

Indoor Environment Modeling

Indoor environments are essentially built for humans to afford humans’ daily activities. The interaction between humans and environments can help tell us the essential information of the environments. Modeling indoor environments has many practical applications such as intelligent surveillance systems, human-robotics interactions, and improved object detection and recognition. Ongoing work includes developing novel computational models and algorithms for indoor environment modeling.

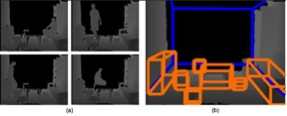

In our work, we exploit human-scene interactions to model indoor environment. As shown in Figure 1, from a person who is standing, we can roughly know where the floor plane is; from a person who is sitting, we can roughly know where the chair is. Based on these observations, we argue in this work that modelling indoor environments should fully exploit the cues provided by human-environment interaction.

Our approach extracts the spatial layout of a cluttered room and represents objects as 3-D cuboids in the environment from a depth video by exploiting information from human-environment interaction. (a) Four frames of an indoor environment depth video (the camera is stationary). (b) The modelling results.

We collect a new dataset (15 depth videos) for our experiments. Our approach achieves an average error rate of 21.34%, while the performance number of Baseline 1 (Environment modelling without human and object information) and Baseline 2 (Environment modelling using objects but without human information) are 21.34% and 29.68%, respectively.

Indoor Human Identification

Human identification at indoor environments is very important to build an intelligent indoor system. The goal of this research is to recognize human identify under unconstrained conditions. Ongoing work includes developing novel human signatures and features for indoor human identification.

There has been much work on gait recognition in the past years. Several benchmark datasets are also available for evaluation. However, to our best knowledge, all of the existing approaches (datasets) assume that people walk along a fixed direction in each video clip, which is impractical in many real world applications because people usually walk freely and the walking direction may be time-varying. In our work, we aim to identify people from uncontrolled gait, which means that people can walk freely along different directions, at different speeds, etc, in a single video sequence, as shown in Figure 2. To study this new problem, we collect a new dataset using the Microsoft Kinect depth sensor. Compared to the RGB cameras used by the existing datasets, the depth sensor can effectively preserve human appearance information, which makes gait recognition easier to be accepted by the public.

Five frames in a single unconstrained gait sequence of our dataset. From top to bottom are the original gait images, binary segmented silhouette images, and normalized and aligned silhouette images of one gait sequence, respectively.

We evaluate our approach on a newly collected dataset with 20 subjects. With one training video clip for each person, our method can achieve 87.6% rank-1 recognition rate, which is a promising performance number for such a hard problem. And the results also show our method significantly outperform several previous approaches.

Indoor Unsuccessful Activity Recognition

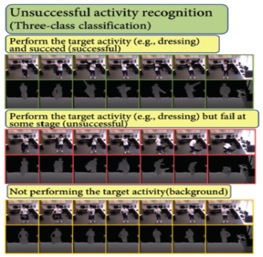

Intelligent rooms are useful for monitoring human behaviors for health care. We would like to know whether an activity is successfully completed or not. For example, a senior person in a nursing home tries to dress himself, but may fail at some stage (e.g., cannot raise his hands). If we have a sensor installed in the room, and this unsuccessful activity is recognized, then a nurse can be automatically alerted to help him. Recognizing unsuccessful activities is also extremely useful for helping patients with dementia, which is one of the most costly diseases. Patients with dementia tend to forget things because of deteriorated cognitive ability. As a result, they have to be fully taken care of by caregivers. If a computer system is able to recognize their unsuccessful activities, then an instructional voice reminder can be automatically played to remind (or guide) them. This will help them live independently and significantly reduce economic cost.

Three different examples when a person performs the activity in the scene: successful, unsuccessful and not performing the activity.

Interestingly, recognizing unsuccessful activities has never been studied before by computer vision researchers. We are the first to build a vision system to tackle this interesting and useful research problem. We developed a novel structural learning framework to detect unsuccessful activities. We build the first realistic dataset, which includes 12 daily living activities such as dressing, drinking, getting up, etc. We experimentally showed that our proposed algorithm achieves promising results on it (the accuracy is around 80%).