
For low-cost, high-quality and real-time Audio-Visual Telepresence

RATEM is a research project conducted at the Advanced Digital Sciences Center (ADSC) as part of the Interactive Digital Media (IDM) sub-program. The PI leading our research efforts is Professor Douglas L. Jones of the University of Illinois at Urbana-Champaign (UIUC).

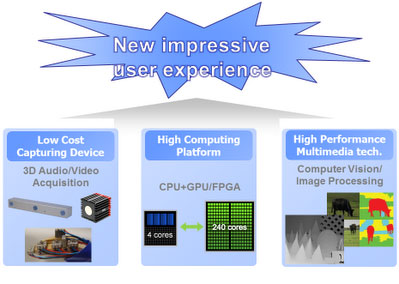

Low-cost, realistic, real-time, and flexible Audio-Visual telepresence is a fundamental goal of AV research in ADSC’s IDM program. It is also a grand challenge in its own right, as acknowledged by the inclusion of virtual reality in the US National Academy of Engineering’s list of 14 grand challenges for engineering in the 21st century. Success in this endeavor would enable natural and seamless communication between individuals through a wide variety of media. The ultimate goal is a system providing full 4D (three spatial dimensions plus time) audio-visual remote reality. Among its many uses, such a system could revolutionize teleconferencing, augmented reality, and gaming by providing (1) true 4D sound and video perception and (2) the ability to synthesize a telepresence spanning several sites, placing participants in a virtual scene and allowing them to interact naturally as if all of them were physically present. Effective interaction with friends, colleagues, and collaborators around the world has become central to success in business, government, and personal relationships. By greatly extending the capability and naturalness of such interaction, the RATEM project contributes to the main vision of the IDM sub-program: enabling more frequent, less costly, high-quality communication and interaction, with large benefits to business and society.

The following sections describe in more detail the goals of the RATEM project within the IDM program, the research challenges and the state of the art, the potential industrial impact, and our research collaborations.

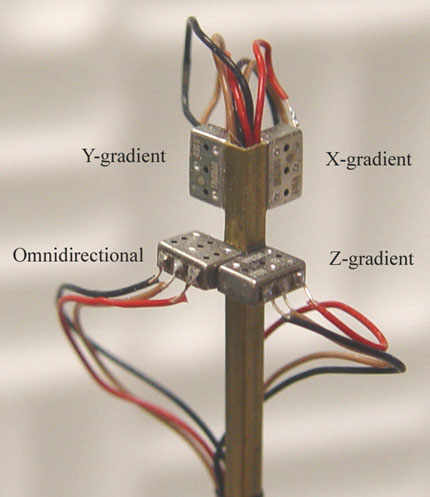

Our ‘Zero-Aperture’ Miniature Microphone Array

Goals of RATEM project within IDM program

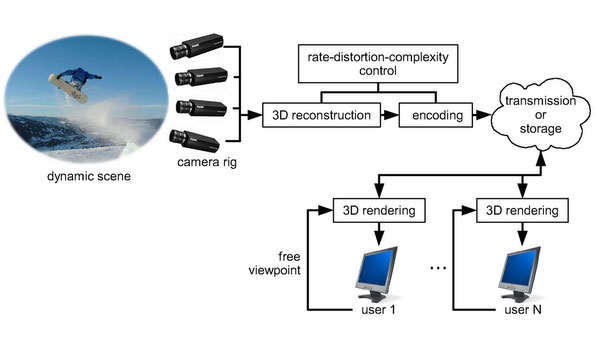

Audio-Visual telepresence aims to provide face-to-face communication that overcomes the distance between people. An ideal Audio-Visual telepresence service should allow participants in distant places to communicate with each other in a natural environment, as if they were in the same room. The RATEM project aims to research and develop realistic 3D audio solutions for low-cost, realistic, real-time, and flexible Audio-Visual telepresence. The corresponding realistic video solutions are being addressed in the ITEM project by the video research group at ADSC.

Much recent effort has gone into advancing audio-visual technology for realistic telepresence services that produce a virtual environment in which individuals can hear, see, and feel a seemingly shared atmosphere. Participants in a meeting normally have no problem listening to one particular speaker in the same room, even when several people speak at the same time. This is due to the well-known cocktail party effect. In a remote teleconference, however, the cocktail party effect is usually lost because the audio is captured and reproduced monaurally. Consequently, remote listeners often have difficulty making out what a particular speaker says within a mixture of conversations. Even in one-to-one communication, high levels of background noise can significantly reduce speech intelligibility and thus meeting efficiency. For example, suppose that Alice attends a virtual meeting from a coffee shop. The background noise of the shop and other patrons’ voices will mix with Alice’s speech, significantly degrading what the other attendees hear.

Further, human hearing is extremely sensitive to the perceived direction of arrival of sounds. If there are even small differences between the expected and perceived directions of arrival, so that the voice of the colleague on Alice’s (virtual) left seems to come from another direction, the illusion of co-presence will be broken. Furthermore, if a face-tracking camera is used and Alice moves out of the camera’s view, or sits in poor lighting where face detection may not work properly, acoustic direction finding can help steer the camera’s focus back to Alice’s face. Thus, clean capture of acoustic sources, accurate estimation of each speaker’s direction of arrival, and appropriate reconstruction of their virtual directions for realistic audio display are critical needs for immersive communication, and they are driving the demand for more realistic 3D (three-dimensional) audio technologies.

Towards our vision of building a realistic and cost-effective Audio-Visual telepresence system, we are addressing the following fundamental but challenging aspects:

- 3D audio direction-finding: developing theory and methods for finding, in real time, the directions of speakers and other sound sources of interest in 3D space, using a small-aperture array with a small number of microphones;

- Directional voice acquisition and noise reduction: investigating robust methods for picking up only the target speech in the presence of interference;

- Binaural 3D audio reconstruction: capturing 3D spatial audio information using a low-cost microphone array and reconstructing the 3D spatial audio effects over headphones or loudspeakers.
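To make the small-aperture challenge concrete, consider the standard far-field model for one pair of microphones spaced $d$ apart, with a source at angle $\theta$ from broadside and speed of sound $c$. The spacings below are illustrative figures chosen for the arithmetic, not the dimensions of the RATEM array:

$$
\tau(\theta) = \frac{d\,\sin\theta}{c}, \qquad |\tau| \le \tau_{\max} = \frac{d}{c}
$$

$$
d = 2\ \text{cm},\ c \approx 343\ \text{m/s}: \quad \tau_{\max} \approx 58.3\ \mu\text{s} \approx 2.8 \text{ samples at } f_s = 48\ \text{kHz}
$$

$$
d = 20\ \text{cm}: \quad \tau_{\max} \approx 583\ \mu\text{s} \approx 28 \text{ samples at } f_s = 48\ \text{kHz}
$$

With a 2 cm pair, the whole range of directions from $-90^\circ$ to $+90^\circ$ maps onto less than $\pm 3$ samples of delay, so delay-based direction finding must resolve time differences far below one sample. This is one reason why shrinking the array aperture makes direction finding harder.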

Research challenge and the state of the art

-Can computers be smarter than humans?

It is easy for people to tell approximately which direction a sound is coming from, but it is hard for a computer to do so. Humans have just two ears, yet they can locate sounds in three dimensions (above and below, in front and behind, and to either side) as well as in range (distance). This ability comes from the combined work of the brain, the inner ears, and the external ears. Humans estimate the location of a sound source through a biological neural process that scientists still do not fully understand. Because this neural processing cannot easily be duplicated on computers, determining source location the way humans do remains very hard, if it is possible at all, even when the sound signals received at the two ears are available. To mimic the localization ability of humans and other animals, sound source localization has become a classic problem in array signal processing and has been studied extensively with arrays of physically separated microphones. Yet although localization is easy for humans, no known algorithm does a decent job of localizing a sound source in real time in the presence of room echoes, reverberation, and background noise, even when a very large microphone array is used. The problem becomes harder still when multiple broadband sound sources are present.
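A classic way to localize a source with two physically separated microphones is to estimate the time difference of arrival (TDOA) between them and convert it to an angle. The sketch below uses the generalized cross-correlation with phase transform (GCC-PHAT), a standard textbook technique shown only to illustrate the problem; it is not necessarily the method used in RATEM. The function names `gcc_phat` and `doa_from_pair`, the 48 kHz sample rate, the 20 cm spacing, and the single-source, far-field, echo-free assumptions are all illustrative.

```python
import numpy as np


def gcc_phat(sig, ref, fs, max_tau=None):
    """Estimate the delay (in seconds) of `sig` relative to `ref` with GCC-PHAT."""
    n = len(sig) + len(ref)  # zero-pad so the correlation is linear, not circular
    cross = np.fft.rfft(sig, n=n) * np.conj(np.fft.rfft(ref, n=n))
    cross /= np.abs(cross) + 1e-12  # PHAT weighting: keep phase, discard magnitude
    cc = np.fft.irfft(cross, n=n)

    # Only search physically possible lags (|lag| <= fs * max_tau).
    max_shift = n // 2
    if max_tau is not None:
        max_shift = min(int(fs * max_tau), max_shift)
    # Reorder so that index 0 corresponds to lag -max_shift.
    cc = np.concatenate((cc[n - max_shift:], cc[: max_shift + 1]))
    lag = int(np.argmax(np.abs(cc))) - max_shift
    return lag / fs


def doa_from_pair(x1, x2, fs, d, c=343.0):
    """Far-field direction (degrees from broadside) for two mics spaced d metres apart.

    Positive angles point toward mic 1's side: the wavefront reaches mic 1 first.
    """
    tau = gcc_phat(x2, x1, fs, max_tau=d / c)
    angle = np.degrees(np.arcsin(np.clip(c * tau / d, -1.0, 1.0)))
    return angle, tau


# Usage with synthetic data: white noise that reaches mic 2 ten samples after mic 1.
fs = 48000
rng = np.random.default_rng(0)
s = rng.standard_normal(4800)
x1 = s
x2 = np.concatenate((np.zeros(10), s[:-10]))
angle, tau = doa_from_pair(x1, x2, fs, d=0.2)
print(f"TDOA = {tau * 1e6:.1f} us, direction = {angle:.1f} deg")
```

PHAT weighting is commonly chosen because it lets every frequency contribute equally to the correlation peak instead of letting a few loud bands dominate. This sketch only resolves whole-sample lags and assumes one source in a dry room; as the paragraph above notes, real echoes, reverberation, noise, and multiple broadband talkers are exactly where such simple estimators break down.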

-Can the Cocktail Party effect be preserved?

The cocktail party effect describes the ability to focus one’s listening attention on a single talker among a mixture of conversations and background noises, ignoring other conversations [1]. The effect enables most people to talk at a noisy and crowded party. However, if a remote participant joins such a party through a current telepresence system, it will be difficult for that participant to focus on a single talker, because monaural audio loses the cocktail party effect. One of the most useful remedies is to give the remote listener good sound-image localization of the party by re-creating a realistic sound field. Two common approaches to reproducing the spatial sound field have been studied extensively: one reconstructs the desired sound field in the vicinity of each of the listener’s ears, and the other reconstructs the entire sound field of a particular room or environment. Even with a large microphone array, a large number of loudspeakers, and a very large implementation budget, no known system does a decent job of capturing and reproducing a realistic 3D spatial sound field. Alternatively, a method that can retrieve a particular talker’s speech at a cocktail party would significantly increase the efficiency of remote immersive communication. However, the performance of current blind speech separation, adaptive beamforming, and echo cancellation techniques falls far below expectations. Therefore, capturing and reconstructing a realistic 3D sound field, and cleanly capturing a target talker’s speech at a cocktail party, remain challenging tasks.
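Beamforming, mentioned above as one way to retrieve a particular talker’s speech, can be illustrated with its simplest form. The sketch below is a fixed delay-and-sum beamformer for a uniform linear array, a simpler relative of the adaptive beamformers the text refers to, and not a description of the RATEM system. The helper names (`fractional_delay`, `arrival_delays`, `delay_and_sum`, `capture`), the 4-microphone, 5 cm geometry, the 16 kHz sample rate, the free-field plane-wave model, and the use of white noise as a stand-in for speech are all hypothetical choices made for illustration.

```python
import numpy as np


def fractional_delay(x, delay_s, fs):
    """Delay x by delay_s seconds (may be fractional) via a linear phase shift.

    The FFT makes this a circular delay: fine for a sketch, but a real-time
    system would process short overlapping frames instead.
    """
    n = len(x)
    f = np.fft.rfftfreq(n, d=1.0 / fs)
    return np.fft.irfft(np.fft.rfft(x) * np.exp(-2j * np.pi * f * delay_s), n=n)


def arrival_delays(mic_x, theta_deg, c=343.0):
    """Far-field arrival delay (s) at each mic of a linear array, positions in metres."""
    return np.asarray(mic_x) * np.sin(np.radians(theta_deg)) / c


def delay_and_sum(channels, mic_x, theta_deg, fs, c=343.0):
    """Steer toward theta_deg: undo each mic's arrival delay, then average."""
    delays = arrival_delays(mic_x, theta_deg, c)
    aligned = [fractional_delay(ch, -tau, fs) for ch, tau in zip(channels, delays)]
    return np.mean(aligned, axis=0)


def capture(src, mic_x, theta_deg, fs):
    """Simulate a plane wave from theta_deg at every mic (no room, no sensor noise)."""
    return [fractional_delay(src, tau, fs) for tau in arrival_delays(mic_x, theta_deg)]


# Usage: a talker at +30 deg and an interfering source at -40 deg.
fs = 16000
mic_x = [0.0, 0.05, 0.10, 0.15]  # hypothetical 4-mic array with 5 cm spacing
rng = np.random.default_rng(1)
talker = rng.standard_normal(4095)  # odd length: no Nyquist bin, so delays invert exactly
interferer = rng.standard_normal(4095)

# The beamformer is linear, so each source can be passed through it separately.
y_talker = delay_and_sum(capture(talker, mic_x, 30, fs), mic_x, 30, fs)
y_interf = delay_and_sum(capture(interferer, mic_x, -40, fs), mic_x, 30, fs)
print(f"interferer attenuation: {10 * np.log10(np.var(interferer) / np.var(y_interf)):.1f} dB")
```

With this geometry the steered talker passes through unchanged while the off-axis source is attenuated by only a few decibels, mostly at higher frequencies, because a small array forms a very wide beam at low frequencies. That modest gain, even in an idealized echo-free simulation, is consistent with the paragraph’s point that current techniques fall short of cleanly extracting one talker in a real room.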

-Low cost, effective and real time…

Realistic teleimmersion will never be widely deployed if each user must have a large physical microphone array, a large number of loudspeakers, an expensive colour and depth camera, and unlimited bandwidth. Yet any restriction on the cost and portability of such resources increases the technical challenges and makes it harder to maintain a user-friendly experience.

Given the many challenges that must be addressed on the audio side, it is not surprising that state-of-the-art teleimmersive systems and ongoing projects, such as Polycom’s HD Telepresence and Video products [2] and the BeingThere Center’s projects at NTU [3], have so far been limited to the visual sense. Improvements in the audio realm have included wideband audio and acoustic echo cancellation, but these systems still lack the illusion of being there because they do not provide a realistic 3D spatial audio environment.

Potential industrial impact

To meet the goals stated above, the RATEM project aims to develop low-cost, small sensors, together with theory, practical approaches, and more efficient processing, that provide: improved sensing capability with reduced sensor size; accurate direction finding of multiple speakers; robustness to environmental effects such as reverberation, background noise, and interference; reduced deployment and calibration effort; extraction and enhancement of target speech; and appropriate reproduction of a realistic 3D acoustic environment.

Singapore is one of the leading countries in electronics manufacturing. This technical lead offers a great opportunity to fabricate and market the novel array sensors. Acoustic direction-finding techniques will have a direct impact on the technological capabilities of numerous fields. Beyond enhanced immersive telepresence, accurate direction finding enables improved sensing, monitoring, and response in the many systems that use audio sensing directly, and improved cost and quality in other systems that can use accurate direction finding as a control input. Thus, acoustic direction finding will have an impact in multiple application areas, including but not limited to:

- Enhanced computer web-camera focus tracking – automatically change the camera’s direction and focus to follow an audio source in the camera’s field of view

- Improved live-action entertainment surround-sound recording – record audio together with its direction so that home users can enjoy high-quality, live-action reproduction of the 3D audio environment

- Commercial surveillance – audio surveillance for automated incident tracking in large, crowded public environments such as malls, stadiums, and other public spaces

- Improved robot spatial awareness and response to user interaction

These applications will directly employ the enhancements developed for this project.

Research collaboration

It has been pointed out that direction-finding technology not only benefits virtual reality applications such as immersive teleconferencing and computer web-camera focus tracking, but also has potential impact in numerous other fields, such as commercial audio surveillance for automated incident tracking in large, crowded public environments, remote battlefield surveillance, situational awareness, security monitoring, and robot audition. All of these applications call for a robust, real-time 3D direction-finding system. Strong external interest has been expressed by the Defense Sciences Organization (DSO) of Singapore. To realize our vision, we are collaborating closely with other research institutes, universities, and companies. Current collaborations include:

- Collaboration with Prof. Gan Woon Seng of the DSP Lab@NTU on 3D audio capture and reproduction for an immersive 3D audio capture and playback system;

- Collaboration with the Institute for Infocomm Research (I2R), A*STAR, on a robot pet project for the health care of the elderly;

- Collaboration with NEC Singapore, ZWEEC Analytics Pte Ltd, and iOmniscient Australia for the Safety and Security Industrial Programme Office (SSIPO) Safe City Testbed.

[1] Bronkhorst, Adelbert W. (2000). “The Cocktail Party Phenomenon: A Review on Speech Intelligibility in Multiple-Talker Conditions.” Acta Acustica united with Acustica 86: 117–128.

[2] Polycom’s HD Telepresence and Video products: http://www.polycom.com/products/hd_telepresence_video/index.html

[3] NTU, BeingThere Center’s Research: http://imi.ntu.edu.sg/BeingThereCentre/AboutBeingThereCentre/Pages/VisionandObjective.aspx