Introduction

Neural networks are considered by some to be one of the most promising technologies for solving perception problems in autonomous driving. In this MP, we will train a neural network for classifying traffic signs using PyTorch.

Step 1. Download the dataset

The GTSRB dataset can be downloaded from here

For training set go to:

Training dataset->

Images and annotations (263MB)

For test set go to:

Test dataset (online-competition only!) ->

Sorted test set (i.e., same directory and filename structure as the training set, elements are sorted by class and track) ->

Images and annotations (84MB)

The code used for the in-class PyTorch tutorial is here.

Step 2. Train a NN using Pytorch

The main task here is to choose the structure and parameters of the Neural Networks and understand the basic workflow of training a network. You will ultimately have to submit a Jupyter notebook, but you can also start working in Python alone.

- Just like before, we need to download the Jupyter file from GitLab:

git clone https://gitlab.engr.illinois.edu/GolfCar/mp2-release

- Extract the downloaded data set into the same folder that you just cloned from GitLab.

- Install PyTorch

![]() PyTorch can be downloaded from the official website.

PyTorch can be downloaded from the official website.

Choose the proper version based on your system.

- Open the Jupyter notebook

jupyter notebook

- Go to the folder where you do the git clone command. Now you should be able to develop your code on Jupyter notebook.

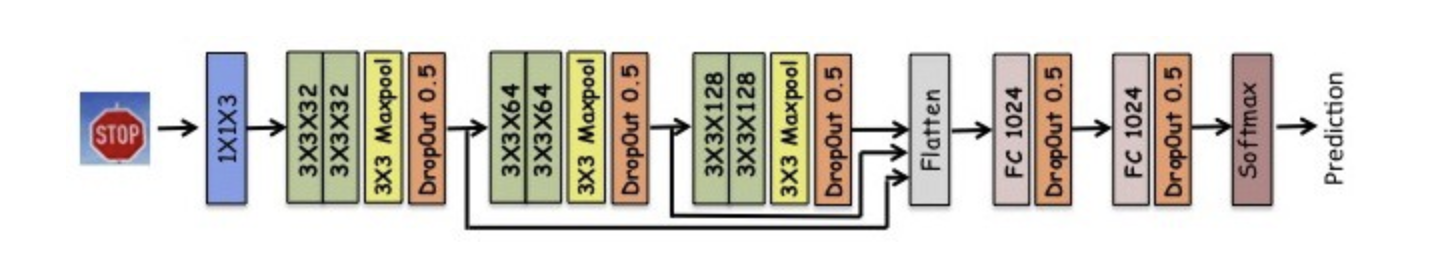

- To begin with, you can try the NN model included in the tutorial Jupyter notebook file. That is not very likely to give you a good result, but you can quickly verify that the code is working and the data are loaded properly. Here is another relatively easy-to-implement example with 98% accuracy after data augmentation. The CNN structure proposed by this article is:

If you want to have very good accuracy, here are some links to state-of-the-art papers:

If you want to have very good accuracy, here are some links to state-of-the-art papers:

Haloi, M. (2016, July 17). Traffic Sign Classification Using Deep Inception Based Convolutional Networks. Retrieved February 11, 2019, from https://arxiv.org/abs/1511.02992 - Training a neural network like these might take 20-60 mins, depending on your machine and model structure. Do start on this MP early.

Step 3. Augment the training data

To improve performance of our CNN, we need to sometimes augment the training dataset. That is, add new training data to classes with high error or too few training data. In this part of the exercise, you are required to perform data augmentation on at least one of the classes in the training dataset. You can try this on more classes if you like.

There are several ways of adding training data, for example, we can change the orientation, flip, scale, change saturation or brightness. Choose one of these techniques or some combination thereof. For the selected class(es), use openCV functions to apply this transformation to all the images in the training set and generate a new set of training images. Perform the training again on this new augmented dataset (old images + transformed images) and report your results. If you are having problem with ppm format, you can convert the images to other image format using pillow package:

Repeat the above with two other transformations (methods) and report the performance results. Of course, when you augment data using method 2, you will have to manually remove the data you had previously generated using method 1 from the Pytorch folder.

Step 4. Break your trained network with adversarial inputs

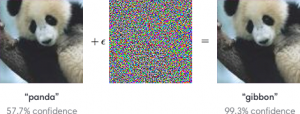

It is well-known that NN classifiers are not robust to perturbations. See this article for a quick explainer. That is, small changes in an input image can cause it to be mis-classified. In this part of the MP, you have to work with your already trained NN to find the most egregious adversarial input.

Picture from this OpenAI article on adversarial inputs.

Find the smallest amount of salt and pepper (recall from lecture 2) noise that has to be added to any image in the data set that was classified correctly, for the image with noise to be miss-classified. Write a function to find this smallest noise for mis-classification, for each image class. Then explore how the smallest noise changes across the different classes. Perform the same experiment with gaussian noise instead of salt and pepper noise. What conclusions can you draw about robustness of your classifier from these experiments?

Step 5. Report

You are expected to write a report (at least 3 pages), including:

- A brief explanation for the structure of your neural network models. You need to try at least three different models.

- Compare the results of your different models.

- Compare the accuracy of your NN classifier in different classes and try to explain why some classes have lower accuracies.

- Describe at least three techniques you used to preprocess the training set (AKA data augmentation), such as flip the image, change brightness, etc. Did they work? Why? Just write down what you think. You don’t have to prove anything.

- Answer the questions in the Adversary part of this webpage.

- If you implemented any advanced techniques, please also include them in the report.

- Citation if needed.

- If you find any typo or you rewrite the code structure to improve performance, please explain in the report. You may get extra credits in your final grade based on your contribution.

Submission

You need to submit the finished Jupyter file (.ipynb) with the results, the trained weights (MP2weights.pth) and the report (pdf) to Compass 2g. Please submit a zip file with name “netid1_netid2_mp2”. Failing to fulfill these requirements may effect your grade.

The deadline is on February 27 at 11:59PM.

Grading Rubric

You are supposed to work with your project teammate. Both of you will get the same grade.

- 30% on accuracy. You will get full points if you got >=95% accuracy, every 1% lower accuracy will take away 1% until 0. We will test your CNN using the whole test set. Make sure include the trained weights in your submission, or you will get 0 for this part.

- 30% on explanation on your models. You need to try at least 3 different structures and choose the best one. Describe the CNN structures and compare the results in your report.

- 20% on data augmentation.

- 20% on adversary task.