Home

This project is conducted at Advanced Digital Sciences Center (ADSC), as part of the Interactive Digital Media (IDM) sub-program. The PI leading our research effort is Dr. Yi Ma from the University of Illinois at Urbana-Champaign and MSRA.

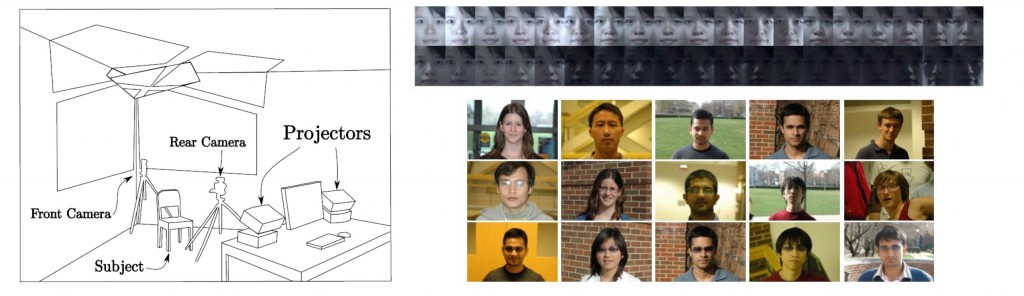

[Left plot] Training acquisition system: Four projectors and two cameras controlled by one computer. [Top-right plot] 38 traning images of one subject captured by the training acquisition system. [Bottom-right plot] Representative testing examples, including indoor/outdoor images (1st row), images of subjects with eyeglasses (2nd row), and image of subjects with sunglasses (3rd row).

Goal:

The goal of this project is to investigate and develop principled computational methods for highly effective, scalable, and robust object recognition systems. The objects of interest to this project range from objects with specific identities such as human faces, (Chinese) characters, and city landmarks to more loosely defined object classes such as automobiles, pedestrians, or trees. Such object recognition systems are urgently needed by a broad range of modern applications: augmented reality; indexing, ranking, and searching of massive images and videos over the Internet; and security surveillance.

Relation to IDM:

ADSC’s interative digital media (IDM) seeks to incorporate audio, video, and unstructured web data to provide a genuine human sixth sense in augmented reality and related applications. Object/face recognition is a key capability for supporting this sixth sense. For example, an augmented reality system must recognize each object that it will provide additional information about. As another example, reliable face recognition can enable personalization of the environment in a smart office or home, from customized heating and cooling settings to automated toddler locks for kitchen appliances and reminders of upcoming appointments at the office. ADSC’s integrative applications for virtual meetings and smart offices leverage this ability to recognize people and other objects. For example, when a virtual meeting system recognizes a particular person at a meeting, the entity search facilities of the ARISE project can find, disambiguate, reconcile, and summarize key information about that person, and make that information available to other attendees.

Challenges:

Such exciting applications as augmented reality also pose tremendous challenges for the research on object recognition. Many of the existing object recognition techniques are still far from meeting the performance demand of many real-world applications. For instance, existing commercial face recognition software do not yet meet the high performance requirements of security systems, and have resulted in several high-profile failures when their application domain gets stretched into security. Also, most of the commercial text recognition systems or barcode readers require very precise alignment of the image orientation and often cannot tolerate any corruption in the input. In fact, the performance of almost all commercial image-based object recognition systems degrades rapidly whenever the input image is different from the training data in the following aspects:

- Taken under significantly different lighting conditions;

The illumination variations among images of the same face are larger than image changes in face identity [1]. Chen et al. [2] illustrated this complexity by showing that there is no discriminative illumination invariant for Lambertian objects under distant point light sources; i.e., it is not possible to determine whether two images were created by the same object under two distant point light sources or by different objects. Hence, given a test image of an object, it is difficult to predict anything definite about this object or how it will appear under different lighting conditions. While the test image can be expressed by a linear combination of an appropriate set of training images [3], [4], the test image taken under significantly different lighting conditions may not be well represented by the training images.

- Partially occluded or corrupted;

Partial occlusion poses a significant obstacle to robust real-world face recognition [5], [6], [7]. Handling occlusion is difficult mainly due to the unpredictable nature of the errors: it may affect any part of the image and may be arbitrarily large in magnitude. A face recognition system can confront occluded faces in real world applications very often due to use of accessories, such as scarf or sunglasses, hands on the face, the objects that persons carry, and external sources that partially occlude the camera view. Therefore, the face recognition system has to be robust to occlusion in order to guarantee reliable real-world operation.

- With a significant pose or viewpoint change.

Another major challenge encountered by current face recognition techniques lies in the difficulties of handling varying poses, i.e., recognition of faces in arbitrary in-depth rotations. The face image differences caused by rotations are often larger than the inter-person differences used in distinguishing identities. Face recognition across pose, on the other hand, has great potentials in many applications dealing with uncooperative subjects, in which the full power of face recognition being a passive biometric technique can be implemented and utilised. A long line of research exists on using Active Appearance Models [8], and the closely related Active Shape Models [9] to register images against a relatively high-dimensional model of plausible face appearances, often leveraging face specific contours. While these model-based techniques have advantages in dealing with variations in expression and pose, they may add unnecessary complexity to applications where subjects normally present a neutral face or only have moderate expression.

In recent years, from the lessons learned from face recognition, people have come to realize that in order for any object recognition system to function reliably under real-world conditions, it has to be made robust to at least the above three factors. Precisely, the goal of this project is to systematically study and develop new computational methods that can harness all the information encoded in the entire object image (instead of a small set of features) and make object recognition truly robust to illumination, occlusion, and pose variation. We call this a “holistic approach” to object recognition.

References:

[1] Y. Adini, Y. Moses, and S. Ullman, “Face recognition: The problem of compensating for changes in illumination direction,” IEEE Trans. Pattern Anal. Mach. Intell., vol. 19, no. 7, pp. 721-732, July 1997.

[2] H. Chen, P. Belhumeur, and D. Jacobs, “In search of illumination invariants,” in Proc. IEEE Comput. Soc. Conf. Comput. Vis. Pattern Recog., 2000, vol 1, pp. 254-261.

[3] P. Belhumeur and G. Hager, “Tracking in 3D: Image variability decomposition for recovering object pose and illumination,” Pattern Analysis and Applications, vol. 2, pp. 82–91, 1999.

[4] H. Murase and S. Nayar, “Visual learning and recognition of 3D objects from appearance,” International Journal of Computer Vision, vol. 14, pp. 5–24, 1995.

[5] A. Martinez, “Recognizing imprecisely localized, partially occluded, and expression variant faces from a single sample per class,” IEEE Trans. Pattern Analysis and Machine Intelligence, vol. 24, no. 6, pp. 748-763, June 2002.

[6] A. Leonardis and H. Bischof, “Robust recognition using Eigenimages,” Computer Vision and Image Understanding, vol. 78, no. 1, pp. 99-118, 2000.

[7] F. Sanja, D. Skocaj, and A. Leonardis, “Combining reconstructive and discriminative subspace methods for robust classification and regression by subsampling,” IEEE Trans. Pattern Analysis and Machine Intelligence, vol. 28, no. 3, Mar. 2006.

[8] T. Cootes, G. Edwards, and C. Taylor, “Active appearance models,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 23, no. 6, pp. 681–685, 2001.

[9] T. Cootes and C. Taylor, “Active shape models – ‘smart snakes’,” in Proceedings of British Machine Vision Conference, 1992.

Impact:

Except for the applications in augmented reality, object recognition has become the core to many new consumer products and commercial services over the Internet or cellular network. For instance, it can be applied to adult content filtering in the web, traffic surveilance, security access control, visual geolocalization, visio-conferences or intelligent man-machine communication. Moreover, object recognition can be marked as representative killer applications for future cloud computing by IT giants like Google, Microsoft, and Apple. As all these companies have started to realize, effective object recognition methods could bring many disruptive technologies that can fundamentally change public safety, human computer interface, social networking and hence bring tremendous new business models and opportunities.

Representative examples of objects for recognition: human faces, texts, bar codes, and landmarks.

Although we have listed only a few examples above, people can already get a sense of the broad range of exciting applications and business opportunities that object recognition can potentially bring (or has already brought). These applications would significantly enhance our ability in recognizing new things around us, effectively making the entire Internet as our backup memory and knowledge base. This obviously will fundamentally alter how we will interact with the environment, with other people, and with machines in the future.