Contributions

Real-time, robust video object cutout

As shown in Figure 1, ITEM has created and demonstrated a video object cutout technique that automatically segments a foreground layer from a live VGA or HD video stream in real time, using only a single webcam and a commodity desktop or laptop CPU [Lu11a]. Grounded on a solid inference basis, the cutout algorithm probabilistically fuses different cues together into a unified optimization framework. The cutout approach includes many novel and robust design choices, which are key to achieving the high accuracy and good performance shown in the prototype implementation of the cutout algorithm. Further, the overall solution framework and some of its key components are applicable for real-time video object cutout with stereo cameras or depth cameras.

ITEM’s new approach to video object cutout advances the state of the art for this classical computer vision problem in three major ways.

- Reliable segmentation with a single webcam: ITEM’s approach works reliably with a single webcam, yet produces accurate results whose quality is comparable to those obtained with more costly or complex setups, such as stereo cameras, depth sensors, and flash and no-flash image pairs. As shown in Figure 2, ITEM’s approach is robust against intensity and color variations in the subject’s appearance and a variety of background changes present in real-life applications, all of which previous methods find very challenging to handle: background and foreground with similar colors, dramatic movements in the foreground, a non-stationary background with moving objects, illumination changes, and shaky cameras.

- Real-time performance: ITEM’s cutout approach extracts foreground objects at unprecedented speeds on an ordinary PC, e.g., processing 18 frames per second at a 1280×960 resolution on a mainstream laptop CPU, with a CPU load of less than 40%. This contrasts with previous methods, which process even 160×120 or 320×240 low-resolution videos at only 7-15 frames per second, or entirely offline.

-

Ease of use: ITEM’s cutout approach requires simple and minimal user participation during system initialization, and is completely automated thereafter. These advantages make ITEM’s cutout approach appealing and practical for wide application in video conferencing, e-learning, and virtual reality.

View our video to see a demonstration of this research.

Lightweight teleimmersive multiparty video chat system

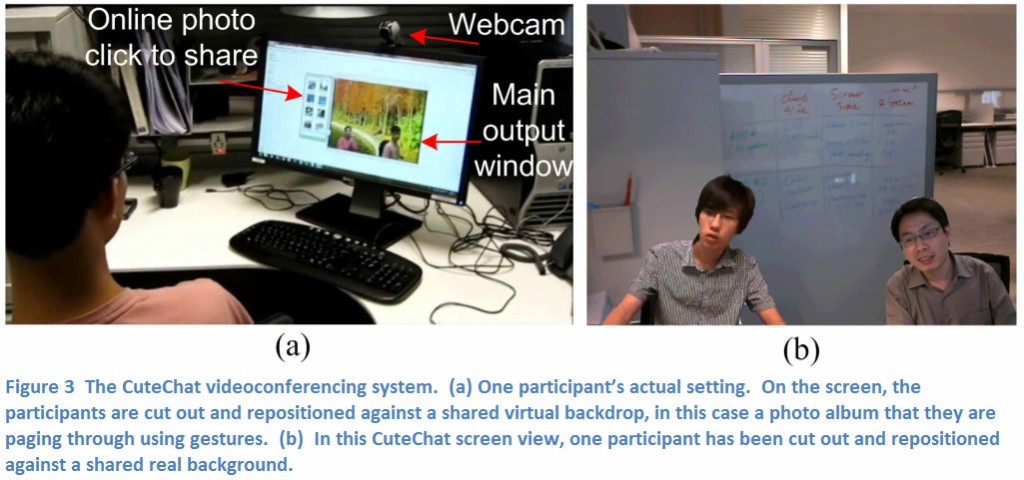

ITEM has created and demonstrated a practical, lightweight teleimmersive video chat system with truly minimal equipment requirements: any webcam, PC, and the public Internet. Figure 3 shows a screen shot from this system, called CuteChat [LNNS11]. CuteChat leverages ITEM’s new video object cutout technology to provide a radically new video chat experience by merging two or more video conferencing participants onto a shared background environment. With the addition of a depth camera, CuteChat employs gesture recognition to allow the participants to interact with each other or with the selected background content in a natural and integrated manner, such as paging through presentation slides or online photo albums with a wave of the arm. With the goal of making the system easily accessible to the typical consumer, CuteChat addresses network bandwidth challenges in addition to the need for single-webcam real-time video object cutout discussed above. To provide low-latency video communication over the public internet, CuteChat employs novel techniques for object-based video coding, which reduces bandwidth requirements and speeds up the video coding process, both by a factor of 3 to 4.

The current CuteChat implementation performs bilateral, Internet-based immersive video conferencing between two or more remote users, with VGA-sized (640×480) video object cutout, object-based encoding and decoding, and immersive video composition, all in real time on a commodity laptop or desktop PC. CuteChat clearly demonstrates unique advantages over current state-of-the-art teleimmersive systems, which, as discussed earlier, are typically bulky, require high Internet bandwidth, and are too computationally intensive to run on an ordinary PC. In addition, CuteChat is easier to use than previous systems, and provides better video quality under most conditions. ITEM has also created methods that allow users to perfect and beautify the appearance of their skin, or to have objects outlined in black and rendered in a limited range of colors, providing a cartoon-like or paint-by-numbers appearance. These facilities, called QuickToon [Lu11b], are incorporated into the CuteChat system.

View our video to see a demonstration of this research.

Color-guided depth video enhancement

Depth cameras became popular in computer vision research as soon as Microsoft released the low-cost Kinect. Their appeal stems from the fact that a depth camera directly reports the distance of objects from the camera, while it is difficult, time-consuming, and error-prone to estimate that same depth information using conventional or even stereo cameras. However, Kinects have problems that limit their applicability in many useful situations: a limited range of focus, low resolution, and significant noise under certain lighting conditions and object colors. On the other hand, conventional cameras can operate quite well in many of the situations that challenge a Kinect. Thus employing the two together has the potential to harness the strengths of each.ITEM has addressed the challenge of improving the quality of depth video by creating novel techniques to increase its resolution and suppress noise [MLD11a, LMPD11]. Our first technique uses information from a conventional camera co-located with the depth camera. We use color information from the conventional camera to help pinpoint inaccuracies in the depth video. More precisely, given a high-resolution color video and its corresponding low-quality depth video, ITEM’s approach employs a weighted mode filtering method that is based on a joint histogram. We have shown that the proposed approach provides the optimal solution with respect to L1 norm minimization, making it superior to all the existing methods in terms of edge-preserving performance. It is particularly important to preserve edges because they delineate object boundaries or other important features that are critical for understanding the content of an image. More specifically, we have shown through extensive experiments using standard test sets of depth maps that the error rate of the depth map enhanced by the proposed approach is only about half that of state-of-the-art methods, especially for challenging depth discontinuities (e.g., 20.2% vs. 40.4% error rate). Further, ITEM’s approach is up to two orders of magnitude faster than state-of-the-art methods on CPUs, and is always at least a factor of two faster. It can be made even faster with a GPU, because its algorithm is highly parallelizable thanks to its pixel-wise independent processing. ITEM’s current implementation runs at 30 frames per second for VGA-sized Kinect depth maps on a GPU, which has never been achieved by previous state-of-the-art methods. While the speedups to date are impressive, there may be theoretical limits on how far one can go in this direction, as exemplified by ITEM’s recent work on how much one can speed up the analysis of video from stereo cameras [MLD11b].

Our second technique exploits the fact that depth information should not change drastically between neighboring frames of a video, i.e., there should be temporal consistency. This temporal dimension has not been extensively exploited in existing methods. To provide temporally-consistent estimates on depth video, we extend the color-based method described above into temporally neighboring frames. We use simple optical flow estimation and a patch similarity measure to improve the depth video quality efficiently. The resulting approach has outstanding performance and is very efficient, compared with existing methods. Further, temporally-consistent enhancement of depth video reduces flickering while improving the accuracy of depth video. For instance, using the aforementioned weighted mode filtering method alone, we have determined that the error rate of the temporally-consistent estimate is only about 50% of that of the single-frame depth enhancement (e.g., 15% vs. 30%). Also, the temporally-consistent estimate imposes insignificant additional overhead at run time.

View-synthesis-driven depth video compression

Immersive telepresence requires us to be able to understand a scene sufficiently well to synthesize a view of it from a different angle, so that, for example, Alice sees a side view of Bob when he is seated next to her. Depth information is crucial for this task, so using a depth camera together with a conventional camera can potentially greatly reduce the computational complexity of many computer vision tasks. Yet depth camera data transmission will eat up precious network bandwidth, which is already in short supply just for conventional video. Depth video can be compressed to reduce the bandwidth requirements. Unfortunately, compression of depth information using conventional video coding standards often introduces serious coding artifacts at object boundaries, due to abrupt signal changes at those edges. Yet for many applications, those object boundary edges are precisely the information we want to obtain from the depth camera, and so introducing noise at those locations during compression will severely limit the effectiveness of using depth video.ITEM has addressed this problem through designing efficient techniques to compress depth video, by exploiting the high correlation between the color and the depth signal. Based on the textureless characteristics of depth maps, we encode depth video at a reduced spatial resolution and depth bits per sample, for significant bit rate savings. We use a novel post-processing method to efficiently reconstruct the depth signal at the original resolution after the decoding process, to preserve critical edge information. In addition, we have created an efficient denoising filter, based on weighted mode filtering inside the encoder, to suppress coding artifacts. We have applied these techniques to the problem of efficient compression of data coming from a depth camera plus a registered color camera, which is an appropriate basic unit for an efficient data representation for 3D video [NMD11]. In practice, our compression achieves a bit rate savings of approximately 63% while simultaneously providing a subjectively better quality synthesized view, compared to state-of-the-art techniques.

References

[LMPD11] J. Lu, D. Min, R. S. Pahwa, and M. N. Do, “A revisit to MRF-based depth map super-resolution and enhancement,” in Proc. IEEE Int. Conf. Acoustics, Speech and Signal Processing (ICASSP), Prague, Czech, May 2011, pp. 985-988.

[LNNS11] J. Lu, V. A. Nguyen, Z. Niu, B. Singh, Z. Luo, M. Do, “CuteChat: A Lightweight Tele-immersive Video Chat System”, ACM Conference on Multimedia, Scottsdale, Arizona, 2011.

[Lu11a] J. Lu, “REFOCUS: Robust and Efficient Foreground Object Cutout Using a Single Webcam”, A*STAR Technology Disclosure, March 2011.

[Lu11b] J. Lu, “QuickToon: Real-time video abstraction and enhancement on general processors”, A*STAR Technology Disclosure, April 2011.

[MLD11a] D. Min, J. Lu, and M. N. Do, “Depth Video Enhancement Based on Joint Global Mode Filtering,” accepted and to appear in IEEE Transactions on Image Processing, May 2011.

[MLD11b] D. Min, J. Lu, and M. N. Do, “A Revisit to Cost Aggregation in Stereo Matching: How Far Can We Reduce Its Computational Redundancy?”, IEEE International Conference on Computer Vision (ICCV), Barcelona, Spain, Nov. 2011.

[NAE11] National Academy of Engineering, Engineering Challenges for the 21st Century, http://www.engineeringchallenges.org/, accessed 24 September 2011.

[NMD11] V. A. Nguyen, D. Min, M. N. Do, “Efficient Techniques for Depth Video Compression Using Weighted Mode Filtering”, submitted for publication.

[NTII11] National Tele-Immersion Initiative, http://www.advanced.org/teleimmersion2.html, accessed 24 September 2011.

[TEEVE11] TEEVE: Tele-immersive Environment for Everybody, http://cairo.cs.uiuc.edu/projects/teleimmersion/ , accessed 24 September 2011.

[LNNS11] J. Lu, V. A. Nguyen, Z. Niu, B. Singh, Z. Luo, M. Do, “CuteChat: A Lightweight Tele-immersive Video Chat System”, ACM Conference on Multimedia, Scottsdale, Arizona, 2011.

[Lu11a] J. Lu, “REFOCUS: Robust and Efficient Foreground Object Cutout Using a Single Webcam”, A*STAR Technology Disclosure, March 2011.

[Lu11b] J. Lu, “QuickToon: Real-time video abstraction and enhancement on general processors”, A*STAR Technology Disclosure, April 2011.

[MLD11a] D. Min, J. Lu, and M. N. Do, “Depth Video Enhancement Based on Joint Global Mode Filtering,” accepted and to appear in IEEE Transactions on Image Processing, May 2011.

[MLD11b] D. Min, J. Lu, and M. N. Do, “A Revisit to Cost Aggregation in Stereo Matching: How Far Can We Reduce Its Computational Redundancy?”, IEEE International Conference on Computer Vision (ICCV), Barcelona, Spain, Nov. 2011.

[NAE11] National Academy of Engineering, Engineering Challenges for the 21st Century, http://www.engineeringchallenges.org/, accessed 24 September 2011.

[NMD11] V. A. Nguyen, D. Min, M. N. Do, “Efficient Techniques for Depth Video Compression Using Weighted Mode Filtering”, submitted for publication.

[NTII11] National Tele-Immersion Initiative, http://www.advanced.org/teleimmersion2.html, accessed 24 September 2011.

[TEEVE11] TEEVE: Tele-immersive Environment for Everybody, http://cairo.cs.uiuc.edu/projects/teleimmersion/ , accessed 24 September 2011.