Home

Project Overview and Plan

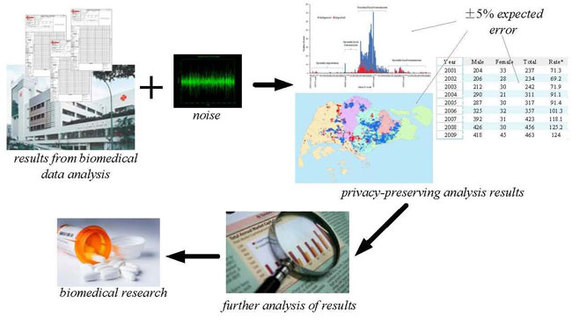

Privacy has been a growing concern in the era of big data, and several major privacy breaches have occurred in the past few years. In 2006, AOL released an anonymized dataset containing the search records of its users, with all direct personal identifiers removed. Soon, reporters were able to re-identify an individual, by cross referencing these records with phonebook listings.Similarly, in 2009, researchers found that a considerable portion of users can be identified from the movie recommendation dataset released in a Netflix data mining competition, by combining the data with the comments retrieved from the Internet Movie Database. Both cases highlight the importance of privacy preserving technology. One important area where privacy-preserving technology is particularly important medical research. The movement towards electronic medical records brings the opportunity for creating vast repositories of patient data that can be mined to dramatically accelerate the rate of progress in medical research. However, privacy concerns make it is infeasible to provide researchers with unfettered access to medical records. Differential privacy promises a practical resolution to this conflict. To preserve differential privacy, the contributions of any one individual to the answer of any question must be negligible, in a precise mathematical sense. It is intuitive that this preserves people’s privacy, since the query results given to researchers are essentially the same, whether or not a particular person’s data is included in the database. Differential privacy, then, creates the tantalizing possibility of privacy-preserving data analysis that is both useful and provably secure. Our goal in this project is to take the theoretical concept of differential privacy and turn it into a set of practical techniques that can be used for the most common types of mining tasks for sensitive medical data.

To achieve this goal, the project team includes biomedical researchers from the Genome Institute of Singapore and from NUHS/NUS, along with data mining and security experts from ADSC, I2R, and NTU. The overall plan was for the biomedical researchers to identify the types of analyses where they most wanted to be able to obtain differentially private results, along with the accuracy needed for those results in order for them to be useful. Then the computer scientists would devise a differentially private version of each identified type of analysis, and validate the quality of the results using data provided by the biomedical researchers.

Damson at ADSC

Research Challenges

We have identified two fundamental challenges in the application of the differential privacy technology.

- For the most common types of biomedical analyses, how can we minimize the noise added / maximize the utility of the analysis results?

- The privacy budget is a parameter chosen by the data owner that controls the hardness for an attacker to infer sensitive information from the published dataset. Each analysis uses up some of the “privacy budget”. How can we make the budget last as long as possible?

Research Contributions

By working with the biomedical collaborators and studying the reports released by the Ministry of Health, the computer scientists on the team identified a rather large set of types of statistical analyses that are popular and important in the biomedical community. As planned, the creation of differentially private versions of these analysis techniques became the main target of our research. Example analyses include:

- Data cubes for data aggregation

- Histograms

- K-means clustering (using genetic algorithms)

- SVM classification (using genetic algorithms)

- GWAS studies

- Linear regression

- Logistic regression (using genetic algorithms)

- Synthetic differentially private datasets

- Cox regression

- Weibull regression

The project also deals with the higher-level problem that each use of the same data in a different differentially private analysis will eat away a little more of the strength of the privacy promise for that data. We have designed two techniques to address this problem.

Along the way, we have learned that the broader medical community and the public does not believe that de-identified biomedical records can relatively easily be re-identified. This raised the risk that our project might produce excellent techniques to help preserve privacy, but find no uptake of the techniques in the biomedical community. Thus we have expended some effort on debunking this belief, by demonstrating how easily de-identified records can be re-identified using statistical techniques and easily available background information. More precisely, we have performed statistical analyses to clarify the risk of reidentification of:

- The published results of GWAS studies

- De-identified medical records

- “Anonymized” social network data, which is relevant for study of the spread of epidemics

We created a technique for making certain types of data sets differentially private and publishing them, rather than publishing only the results of differentially private analyses over the data:

Publications

- J. Zhang, X. Xiao, Y. Yang, Z. Zhang and M. Winslett. PrivGene: Differentially Private Model fitting Using Genetic Algorithms. SIGMOD, 2013.

- J. Xu, Z. Zhang, X. Xiao, Y. Yang, G. Yu and M. Winslett. Differentially Private Histogram Publication. VLDB J., to appear.

- J. Zhang, Y. Tang, X. Xiao, Y. Yang, Z. Zhang and M. Winslett. An Iterative Algorithm for Graph De-Anonymization. WSDM, 2013, poster.

- S. Peng, Y. Yang, Zhang, Z., M. Winslett and Y. Yu. Query Optimization for Differentially Private Data Management Systems. ICDE, 2013.

- M. Winslett, Y. Yang and Z. Zhang. Demonstration of Damson: Differential Privacy for Analysis of Large Data. SC-BDA, 2012.

- G. Yuan, Z. Zhang, M. Winslett, X. Xiao, Y. Yang and Z. Hao. Low-Rank Mechanism: Optimizing Batch Queries under Differential Privacy. VLDB, 2012.

- J. Zhang, Z. Zhang, X. Xiao, Y. Yang and M. Winslett. Functional Mechanism: Regresssion Analysis under Differential Privacy, VLDB, 2012.

- S. Peng, Y. Yang, Z. Zhang, M. Winslett and Y. Yu. DP-Tree: Indexing Multi-Dimensional Data under Differential Privacy, SIGMOD, 2012, poster.

- Y. Yang, Z. Zhang, G. Miklau, M. Winslett and X. Xiao. Differential Privacy in Data Publication and Analysis. SIGMOD’12, tutorial.

- J. Xu, Z. Zhang, X. Xiao, Y. Yang and G. Yu. Differentially Private Histogram Publication. ICDE, 2012.

- Y. Li, Z. Zhang, M. Winslett and Y. Yang. Compressive Mechanism: Utilizing Sparse Representation in Differential Privacy. WPES, 2011.

- B. Ding, M. Winslett, J. Han and Z. Li, Differentially Private Data Cubes: Optimizing Noise Sources and Consistency. SIGMOD, 2011.

- X. Xiao, G. Bender, M. Hay, and J. Gehrke. iReduct: Differential Privacy with Reduced Relative Errors. SIGMOD, 2011.