Audio signal processing would seem to have nothing to do with the COVID-19 pandemic. It turns out, however, that a low-complexity signal processing algorithm used in hearing aids can also be used to monitor breathing for patients on certain types of ventilator.

To address the shortage of emergency ventilators caused by the pandemic, this spring the Grainger College of Engineering launched the Illinois RapidVent project to design an emergency ventilator that could be rapidly and inexpensively produced. In little more than a week, the team built a functional pressure-cycled pneumatic ventilator, which is now being manufactured by Belkin.

The Illinois RapidVent is powered by pressurized gas and has no electronic components, making it easy to produce and to use. However, it lacks many of the monitoring features found in advanced commercial ventilators. Without an alarm to indicate malfunctions, clinicians must constantly watch patients to make sure that they are still breathing. More-advanced ventilators also display information about pressure, respiratory rate, and air volume that can inform care decisions.

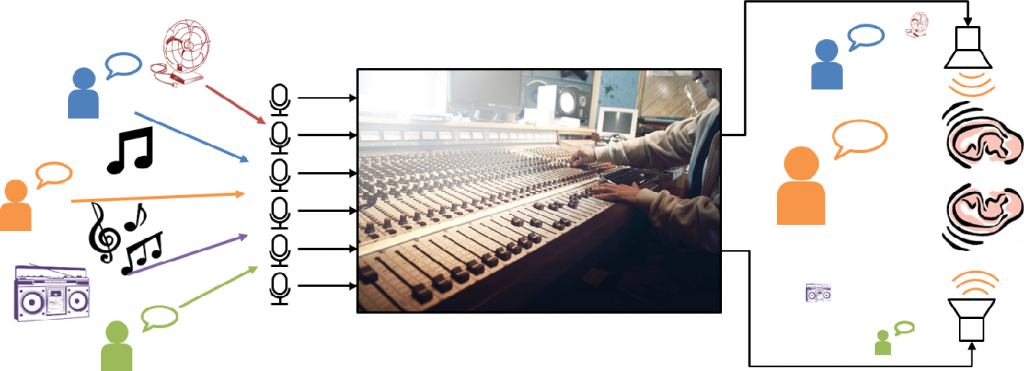

The Illinois RapidAlarm adds monitoring features to pressure-cycled ventilators.

To complement the ventilator, a team of electrical engineers worked with medical experts to design a sensor and alarm system known as the Illinois RapidAlarm. The device attaches to a pressure-cycled ventilator, such as the Illinois RapidVent, and monitors the breathing cycle. It includes a pressure sensor, a microcontroller, a buzzer, three buttons, and a display. It shows clinically useful metrics and sounds an audible alarm when the ventilator stops working. The hardware design, firmware code, and documentation are available online under open-source licenses. A paper describing how the system works is available on arXiv.
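To make the idea of monitoring the breathing cycle concrete, here is a minimal Python sketch of one way a monitor could turn pressure samples into a respiratory rate and an alarm decision. It is an illustration under stated assumptions, not the RapidAlarm firmware or the algorithm from the paper: the function names, the simple threshold-crossing detector, the pressure units (cmH2O), and the timeout value are all hypothetical.

```python
from typing import List, Optional, Sequence


def detect_breaths(pressure: Sequence[float],
                   sample_rate_hz: float,
                   threshold: float) -> List[float]:
    """Return the times (in seconds) at which pressure rises through `threshold`.

    Each upward crossing is treated as the start of one breath.
    This simple detector is an assumption made for illustration.
    """
    times = []
    for i in range(1, len(pressure)):
        if pressure[i - 1] < threshold <= pressure[i]:
            times.append(i / sample_rate_hz)
    return times


def respiratory_rate_bpm(breath_times: Sequence[float]) -> Optional[float]:
    """Estimate breaths per minute from breath start times.

    Returns None when fewer than two breaths have been seen.
    """
    if len(breath_times) < 2:
        return None
    span_s = breath_times[-1] - breath_times[0]
    return 60.0 * (len(breath_times) - 1) / span_s


def alarm_needed(breath_times: Sequence[float],
                 now_s: float,
                 timeout_s: float) -> bool:
    """Return True if no breath has started within the last `timeout_s` seconds."""
    last_breath_s = breath_times[-1] if breath_times else 0.0
    return now_s - last_breath_s > timeout_s


# Synthetic test signal (hypothetical values): a 3-second breathing cycle
# sampled at 10 Hz, with 2 s at low pressure and 1 s at high pressure.
SAMPLE_RATE_HZ = 10.0
one_cycle = [5.0] * 20 + [20.0] * 10   # assumed pressures in cmH2O
signal = one_cycle * 4                 # 12 seconds of normal cycling

breaths = detect_breaths(signal, SAMPLE_RATE_HZ, threshold=12.0)
rate = respiratory_rate_bpm(breaths)
print("breath start times (s):", breaths)
print("respiratory rate (breaths/min):", rate)
print("alarm at t=12 s:", alarm_needed(breaths, now_s=12.0, timeout_s=5.0))
print("alarm at t=20 s:", alarm_needed(breaths, now_s=20.0, timeout_s=5.0))
```

In this sketch the alarm stays silent while breaths keep arriving and fires once the signal has gone more than `timeout_s` seconds without a new breath. A real device would also have to handle sensor noise and run within a microcontroller's limits.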