This post describes our paper “Binaural Audio Source Remixing for Microphone Array Listening Devices,” which was presented at ICASSP 2020. You can read the paper here or watch the video presentation here.

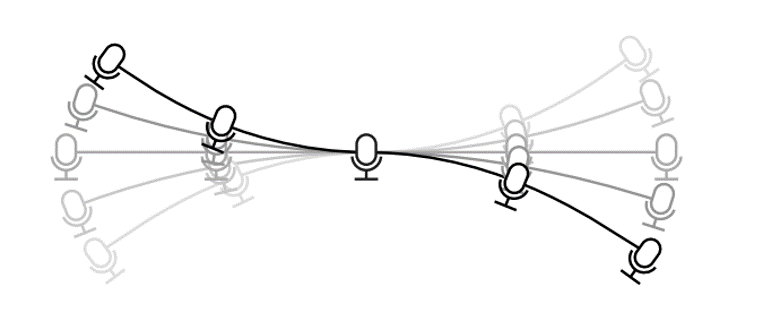

Microphone arrays are important tools for spatial sound processing. Traditionally, most methods for spatial sound capture can be classified as either beamforming, which tries to isolate a sound coming from a single direction, or source separation, which tries to split a recording of several sounds into its component parts. Both are useful in crowded, noisy environments with many sound sources, and both are widely used in speech recognition and teleconferencing systems.

Since microphone arrays are so useful in noisy environments, we would expect them to work well in hearing aids and other augmented listening applications. Researchers have been building microphone-array hearing aids for more than 30 years, and laboratory experiments have consistently shown that they can reduce noise and improve intelligibility, but there has never been a commercially successful listening device with a powerful microphone array. Why not?

The problem may be that most researchers have approached listening devices as if they were speech recognition or teleconferencing systems, designing beamformers that try to isolate a single sound and remove all the others. They promise to let the listener hear the person across from them in a crowded restaurant and silence everyone else. But unlike computers, humans are used to hearing multiple sounds at once, and our brains can do a good job separating sound sources on their own. Imagine seeing everyone’s lips move but not hearing any sound! If a listening device tries to focus on only one sound, it can seem unnatural to the listener and introduce distortion that makes it harder, not easier, to hear.

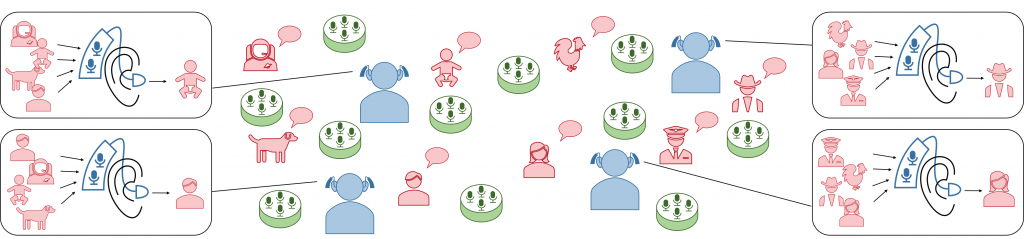

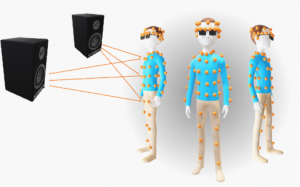

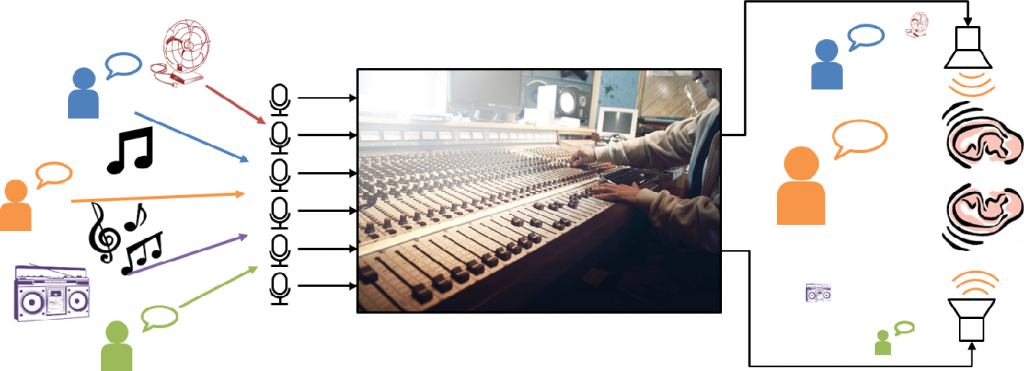

A source remixing system changes the relative levels of sounds in a mixture while preserving their spatial cues.

This paper proposes a new type of array processing for listening devices: source remixing. Instead of trying to isolate or separate sound sources, the system tries to change their relative levels in a way that sounds natural to the listener. In a good remixing system, it will seem as if real-world sounds are louder or quieter than before.
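To make the idea concrete, here is a minimal sketch of what an ideal remixing output would look like. It assumes something a real device does not have: direct access to each source's binaural image (the signal that source alone would produce at the left and right ears). The function name `remix`, the array layout, and the decibel gain parameter are all illustrative choices for this post, not the array filter designed in the paper. The point is only that each source gets one gain, applied identically to both ears, so its interaural level and time differences are left untouched.

```python
import numpy as np

def remix(source_images, gains_db):
    """Idealized source remixing (illustrative sketch, not the paper's filter).

    source_images: array of shape (n_sources, 2, n_samples), where
        source_images[k, 0] and source_images[k, 1] are the left-ear and
        right-ear signals produced by source k alone (assumed known here).
    gains_db: one desired level change per source, in decibels.

    Returns the remixed binaural signal with shape (2, n_samples).
    """
    source_images = np.asarray(source_images, dtype=float)
    # Convert decibel level changes to linear amplitude gains.
    gains = 10.0 ** (np.asarray(gains_db, dtype=float) / 20.0)
    if gains.shape != (source_images.shape[0],):
        raise ValueError("need exactly one gain per source")
    # Apply the same gain to both ears of each source, then sum the sources.
    # Using one gain per source (not per ear) is what preserves spatial cues.
    return np.tensordot(gains, source_images, axes=1)

# Example: keep the first talker as-is and turn the second down by 12 dB.
rng = np.random.default_rng(0)
images = rng.standard_normal((2, 2, 16000))  # 2 sources, 2 ears, 1 s at 16 kHz
output = remix(images, gains_db=[0.0, -12.0])
```

Setting every gain to 0 dB returns the unprocessed mixture, and driving all but one gain toward minus infinity recovers the single-source isolation that a conventional beamformer aims for, so remixing sits between those two extremes.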