This post describes our paper “Mechatronic Generation of Datasets for Acoustics Research,” presented at the International Workshop on Acoustic Signal Enhancement (IWAENC) in September 2022.

Creating datasets is expensive, be it in terms of time or funding. This is especially true for spatial audio: some applications require hundreds of recordings to be taken from specific regions in a room, while others involve arranging many microphones and loudspeakers to mimic real-life scenarios such as a conference. Few researchers have access to dedicated recording spaces that can accurately portray acoustically interesting environments, and fewer still are able to create dynamic scenes where microphones and speakers move precisely to replicate how people walk and talk.

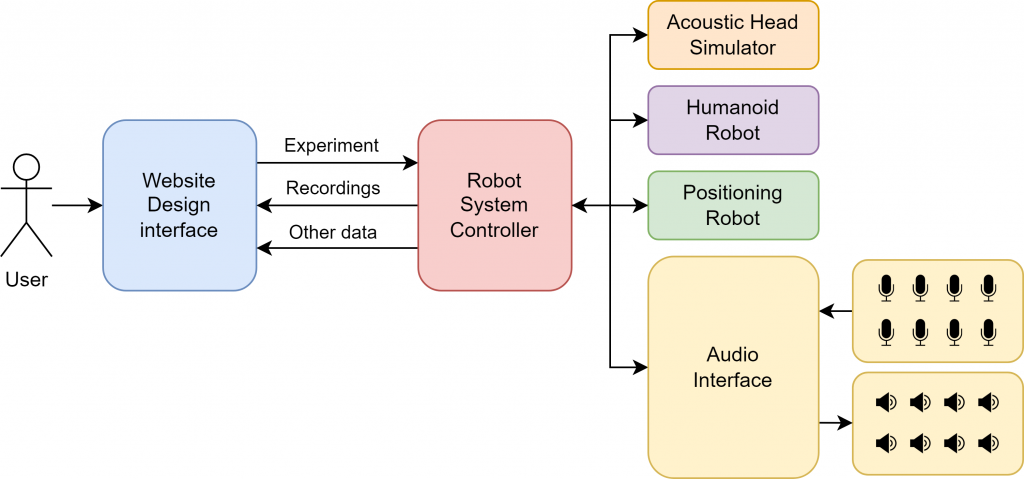

To support the creation of these types of datasets, we propose the Mechatronic Acoustic Research System, or MARS for short. We envision MARS as a robot-enabled recording space that researchers could access remotely. Users could emulate a wide variety of acoustic environments and take recordings with little effort. Our initial concept is a web-based design interface in which users specify a complicated experiment, which a robot system then automatically recreates.

How the MARS frontend and backend link together