Computer Science Research

Recent analyses of exascale systems emphasize that they will not simply be an extension of today’s petascale systems. Among the challenges faced by an exascale system are (1) the plateau in CMOS clock rates, requiring increased concurrency to provide more performance; (2) slower, simpler, and heterogeneous processing elements, with reduced total available memory that demands greater locality of memory references, in order to reduce power consumption, as moving data requires significant power; (3) the increased likelihood of faults caused by the reduction in feature size, increase in the number of components, and possible reduction in voltage that will require software strategies for resiliency; (4) scalability and performance irregularity, caused by the large number of compute elements and the likelihood that addressing some of the other challenges will lead to more adaptive solutions, such as dynamic frequency modification, that make performance less predictable; and (5) latency tolerance, as references to memory, both local and remote, take hundreds to thousands of cycles.

Recent analyses of exascale systems emphasize that they will not simply be an extension of today’s petascale systems. Among the challenges faced by an exascale system are (1) the plateau in CMOS clock rates, requiring increased concurrency to provide more performance; (2) slower, simpler, and heterogeneous processing elements, with reduced total available memory that demands greater locality of memory references, in order to reduce power consumption, as moving data requires significant power; (3) the increased likelihood of faults caused by the reduction in feature size, increase in the number of components, and possible reduction in voltage that will require software strategies for resiliency; (4) scalability and performance irregularity, caused by the large number of compute elements and the likelihood that addressing some of the other challenges will lead to more adaptive solutions, such as dynamic frequency modification, that make performance less predictable; and (5) latency tolerance, as references to memory, both local and remote, take hundreds to thousands of cycles.

To address these challenges, our team will work closely together, within a proven development framework, to enable predictive simulations with our code on current and forthcoming petascale and trans-petascale systems, providing a clear path toward exascale systems.

For concurrency, we need algorithms that expose multiple layers of parallelism to exploit and pro- gramming models that help us express it at all levels. For heterogeneity, we will use GPUs as a stand-in for future architectures that mix different types of functional units; the issues here include both the coordination and management of memory motion as well as the use of many lightweight functional units, possibly managed by more general cores. For locality, we must limit data motion between nodes (using parallel algorithms that are communication-efficient) and within nodes (using, for example, high-order, more memory-efficient methods with better at- tention to temporal locality in the memory hierarchy). For fault detection and resiliency, we need to enlist algorithms and programming models that help both in detecting faults and in providing methods of recovery. Fortunately, the following common ideas allow us to address these issues:

- Scalable numerical algorithms. Rethinking the algorithms provides natural hooks not only for scalability and concurrency but also for load balance, fault detection and recovery, and better memory locality.

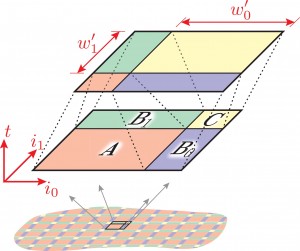

- Overdecomposition. The division of the data structures into more than one chunk per core (or processor) is a powerful approach that helps solve many problems. Even for current systems, this provides an efficient mechanism (as long as the overdecomposition is not extreme) for better memory locality (e.g., with hierarchical tiled arrays), latency hiding with heterogeneous processors (using multi-buffering to overlap transfers with ongoing computation), load balancing on complex multiprocessors, and for complex computations, such as those proposed.

- Adaptive computation. Our application will introduce transient performance needs that need to be accommodated, including temporal and spatial mesh refinement due to a plasma discharge and/or the presence of a thin flame.

Source-to-source transformations are used to maintain a productive programming model for the computational scientist while permitting the generation of code that can be highly optimized by standard compilers.

Though we focus our efforts on our own software infrastructure, which we have developed over years, we emphasize that these can inform the efforts any programming framework will require to perform at exascale.