by Libby Cave

If you are interested in data or relational databases, then you have heard of SQL. SQL, or Structured Query Language, is designed to handle structured data in order to assist in data query, data manipulation, data definition and data access control. It is a very user-friendly language to learn with a simple code structure and minimal use of special characters. Because of this, SQL is the industry standard for database management, and this is reflected in the job market as there is a strong demand for employees with SQL skills.

Enter SQL Murder Mystery

In an effort to promote the learning of this valuable language, Knight Labs, a specialized subsidiary of Northwestern University, created SQL Murder Mystery. Combining the known benefits of gamification and the popularity of whodunit detective work, SQL Murder Mystery aims to help SQL beginners become familiar with the language and have some fun with a normally dry subject. Players take on the role of a gumshoe detective tasked with solving a murder. The problem is you have misplaced the crime scene report and you now must dive into the police department’s database to find the clues. For true beginners with no experience, the website provides a walkthrough to help get players started. More experienced learners can jump right in and practice their skills.

I’m on the case!

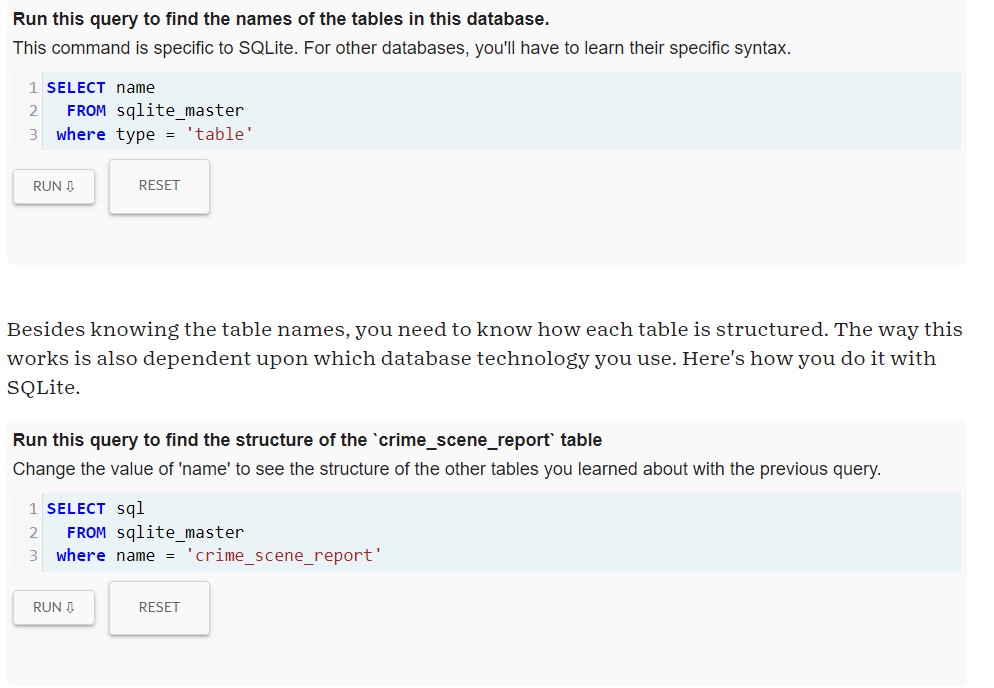

I have no experience with SQL but I am interested in database design and information retrieval, so I knew it was high time that I learn the basics. As a fan of both games and detective stories, SQL Murder Mystery seemed like a great place to start. Since I am a true beginner, I started with the walkthrough. As promised on the website, this walkthrough did not give me a complete, exhaustive introduction to SQL as a language, but instead gave me the tools needed to get started on the case. SQL as a language, relational databases and Entity Relationship Diagrams (ERD) were briefly explained in an approachable manner. In the walk through, I was introduced to vital SQL functions like “Select:, “Where”, wildcards, and “Between”. My one issue with the game was in the joining tables section. I learned later that the reason I was having issues was due to the tables each having columns with the same title, which is apparently a foundational SQL feature. The guide did not explain that this could be an issue and I had to do some digging on my own to find out how to fix it. It seems like the walkthrough should have anticipated this issue and mentioned it. That aside, By the end of the walkthrough, I could join tables, search for partial information matches, and search within ranges. With some common sense, the database’s ERD, and the new SQL coding skills, I was able to solve the crime! If users weren’t challenged enough with that task, there is an additional challenge that suggests users find the accomplice while only using 2 queries.

The Verdict is In

I really loved this game! It served as a great introduction to a language I had never used before but still managed to be really engaging. It reminded me of those escape room mystery boxes like Hunt a Killer that has users solve puzzles to get to a larger final solution. Anyone who loves logic puzzles or mysteries will enjoy this game, even if they have no experience with or even interest in coding or databases. If you have some free time and a desire to explore a new skill, you should absolutely give SQL Murder Mystery a try!