This post is part of a series where we introduce you to the various topics that we cover in the Scholarly Commons. Maybe you’re new to the field or you’re just to the point where you’re just too afraid to ask… Fear not! We are here to take it back to the basics!

So, what is Data Analysis, anyway?

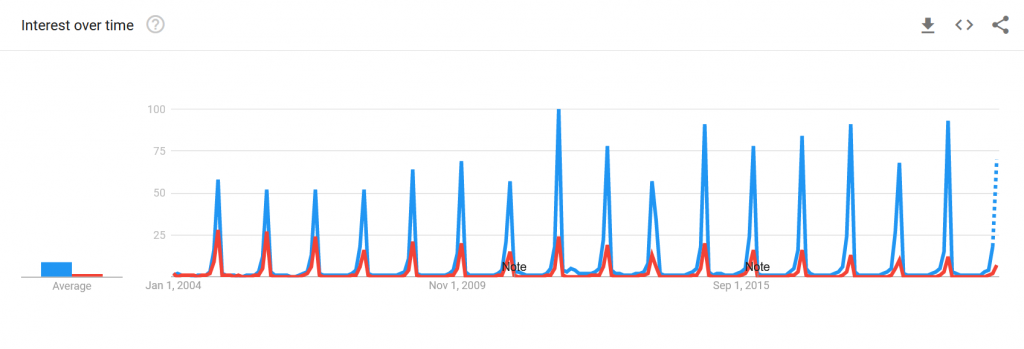

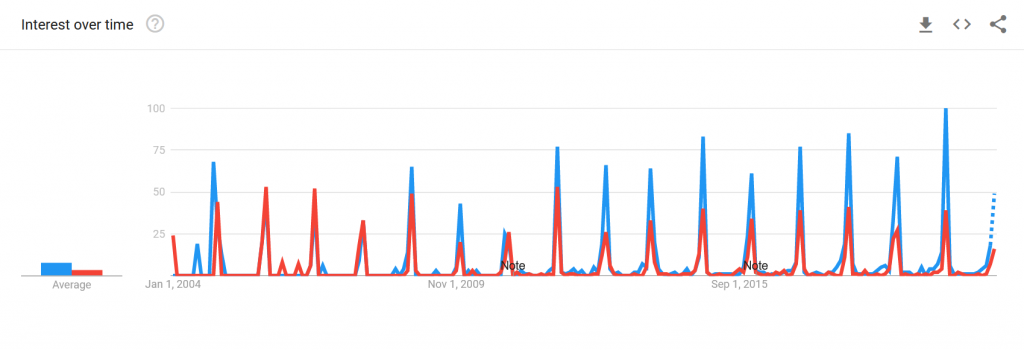

Data analysis is the process of examining, cleaning, transforming, and modeling data in order to make discoveries and, in many cases, support decision making. One key part of the data analysis process is separating the signal (meaningful information you are trying to discover) from the noise (random, meaningless variation) in the data.

The form and methods of data analysis can vary widely, and some form of data analysis is present in nearly every academic field. Here are some examples of data analysis projects:

- Taylor Arnold, Lauren Tilton, and Annie Berke in “Visual Style in Two Network Era Sitcoms” (2019) used large-scale facial recognition and image analysis to examine the centrality of characters in the 1960s sitcoms Bewitched and I Dream of Jeannie. They found that Samantha is the distinctive lead character of Bewitched, while Jeannie is positioned under the domination of Tony in I Dream of Jeannie.

- Allen Kim, Charuta Pethe, Steven Skiena in “What time is it? Temporal Analysis of Novels” (2020) used the full text of 52,183 fiction books from Project Gutenberg and the HaithiTrust to examine the time of day that events in the book took place during. They found that events from 11pm to 1am became more common after 1880, which the authors attribute to the invention of electric lighting.

- Wouter Haverals and Lindsey Geybels in “A digital inquiry into the age of the implied readership of the Harry Potter series” (2021) used various statistical methods to examine whether the Harry Potter books did in fact progressively become more mature and adult with successive books, as often believed by literature scholars and reviewers. While they did find that the text of the books implied a more advanced reader with later books, the change was perhaps not as large as would be expected.

How can Scholarly Commons help?

If all of this is new to you, don’t worry! The Scholarly Commons can help you get started.

Here are various aspects of our data services in the Scholarly Commons:

- Data Discovery: the process of locating the data sets (collections of data) you want to use in your research project. If you are trying to locate data, contact Carissa Phillips, Data Discovery and Business Librarian, for assistance.

- Data Purchase Program: Not all data sets are freely available online. As a result, the Illinois Library has a data purchase program to help faculty, graduate students, and academic professionals obtain the data they need. Our webpage on the data purchase program has more details.

- Data Management: how you store and work with your data in your research project and make it available to other scholars. Research Data Services of the Illinois Library provides support for all your data management needs.

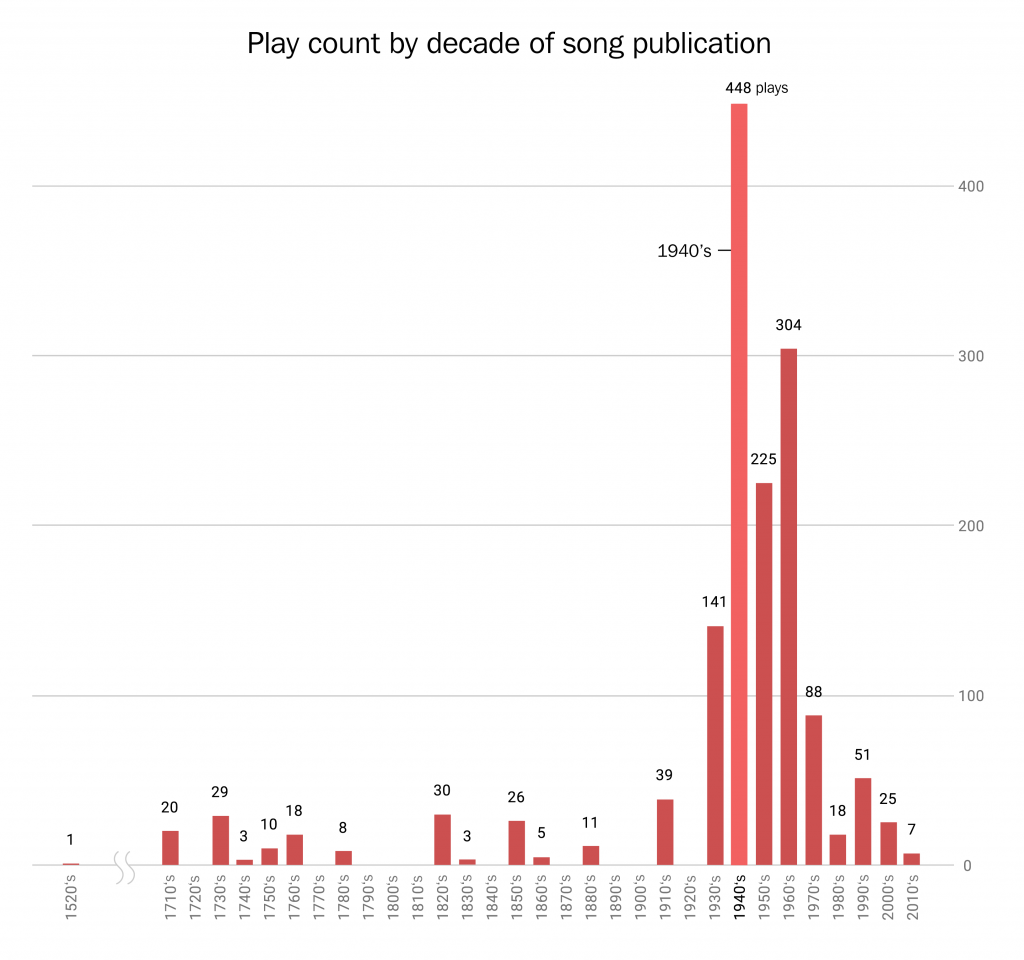

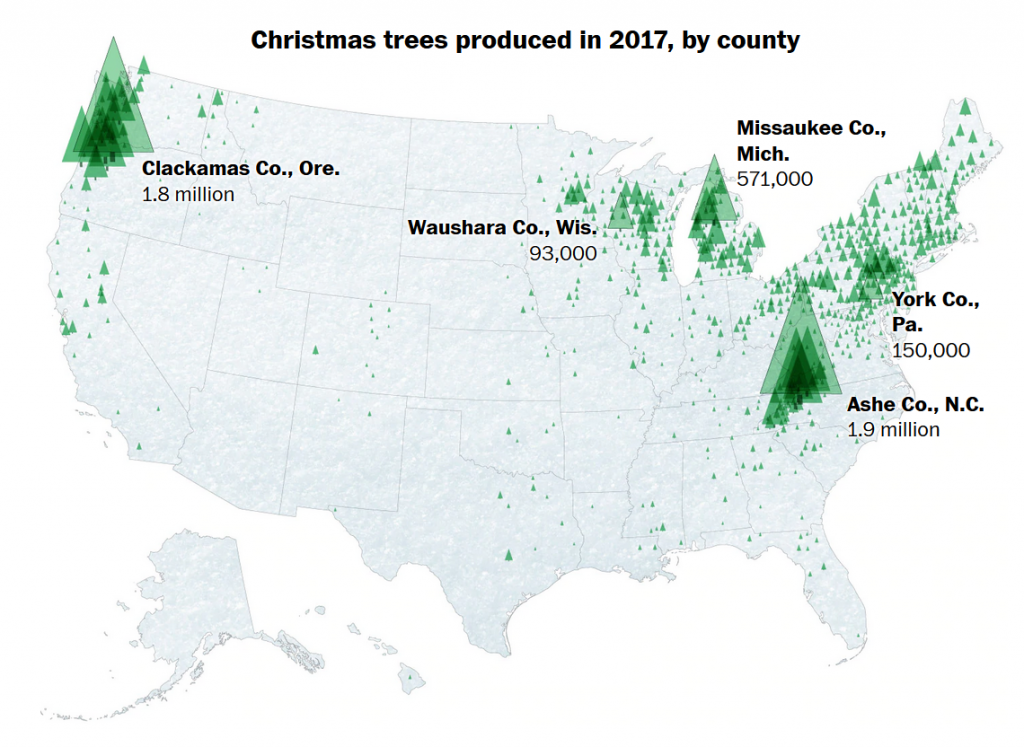

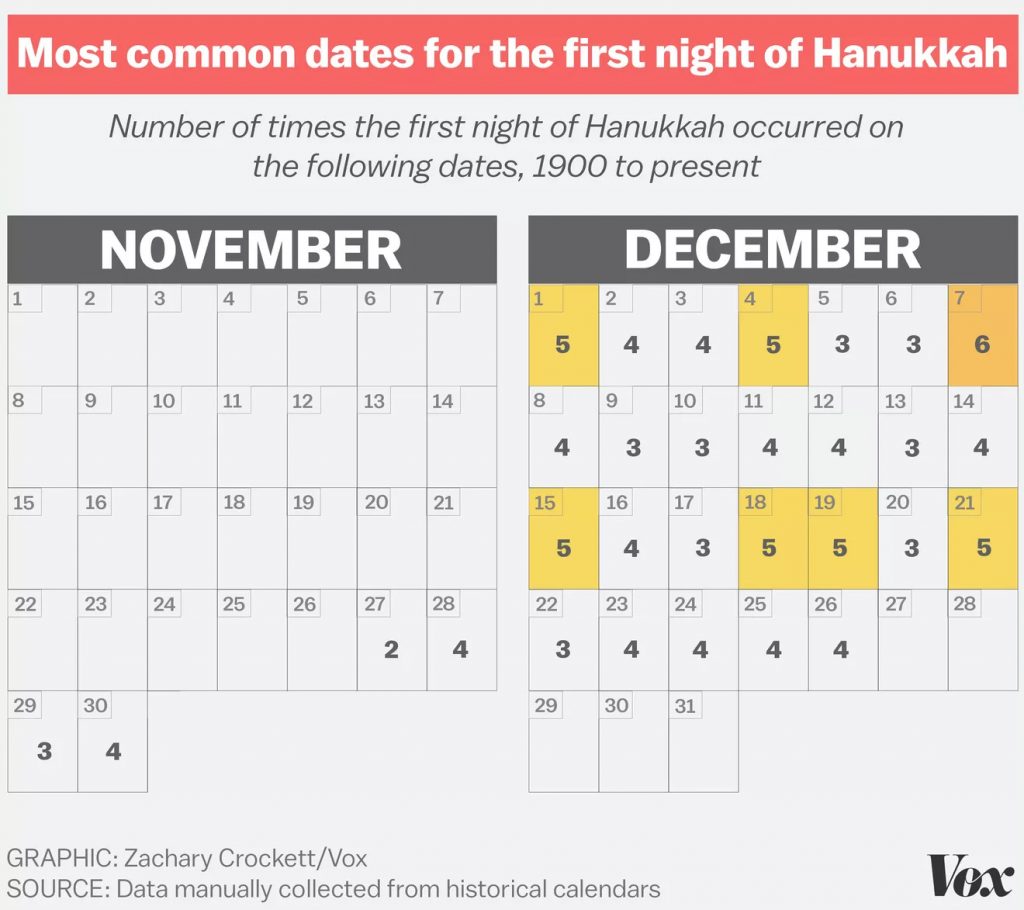

- Data Visualization: the graphic representation of data, created to make patterns in the data visible. Check out our page for Data Visualization for more resources.

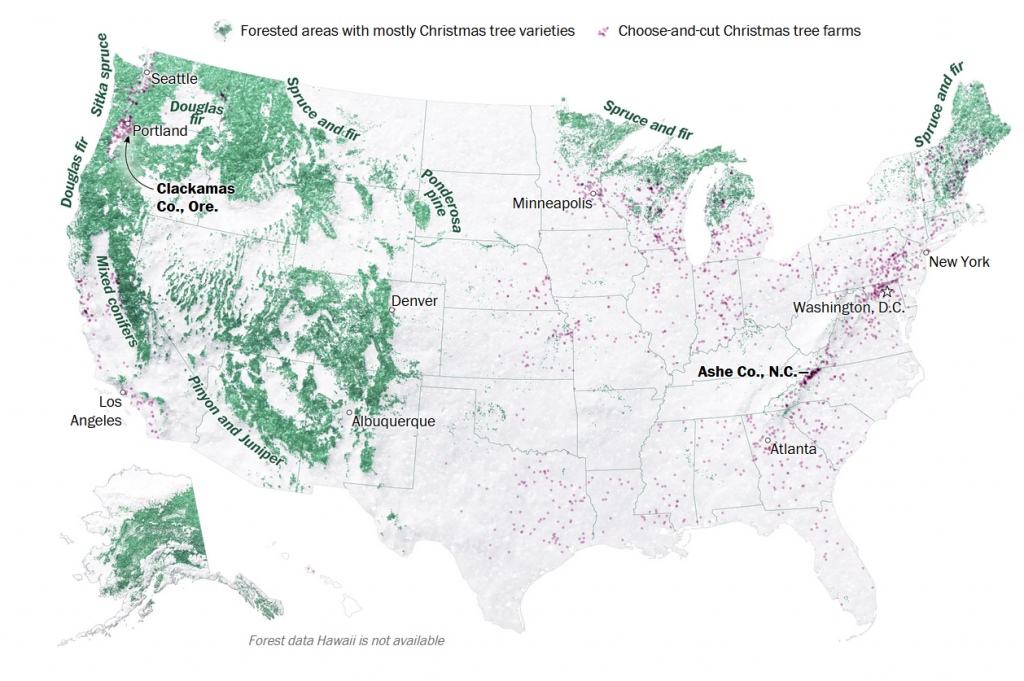

- Geographic Information Systems (GIS): a specific type of data analysis for working with spatial data. We have a separate blog post with its own introduction to GIS.

- Statistical Consulting: whether you are doing quantitative data analysis (number-based data) or qualitative data analysis (text-based data), the Scholarly Commons collaborates with the Center for Innovation in Teaching and Learning (CITL) to offer Statistical Consulting. Check out our webpage for more information.

- Software Available: The computers in Scholarly Commons Room 220 have several data analysis programs available: including R, Stata, SPSS and SAS. Check out our website for a complete list of software available in Room 220.

As always, if you’re interested in learning more about data analysis and how to support your own projects you can fill out a consultation request form, attend a Savvy Researcher Workshop, Live Chat with us on Ask a Librarian, or send us an email. We are always happy to help!